Understanding and Analysing AB Testing Results


29-01-2025 (Last modified: 21-05-2025)


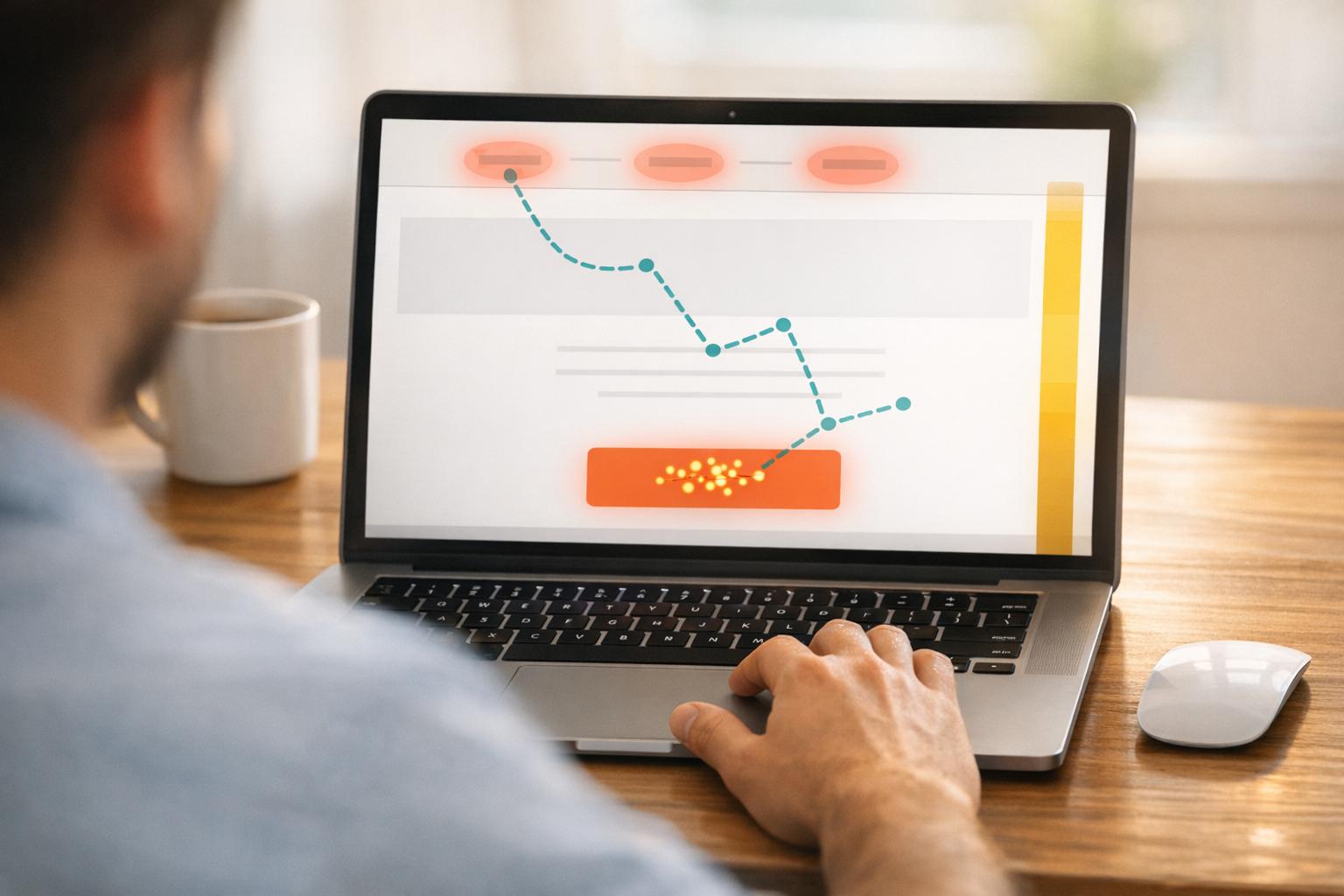

A/B testing is one of the most effective ways to optimize websites, landing pages, and marketing campaigns. But running an A/B test is only half the battle: the real value lies in interpreting the results correctly and using them to make data-driven decisions.

In this guide, we’ll break down how to analyze A/B testing results, why they matter, and how to apply them to improve your marketing and user experience. Whether you’re new to A/B testing or looking to refine your skills, this article will help you turn your test results into actionable insights.

What Are A/B Testing Results?

A/B testing results refer to the data collected after running an experiment comparing two (or more) versions of a webpage, email, or ad. These results help businesses determine which version performs better based on key metrics such as:

- Conversion Rate: the share of visitors who complete a goal (e.g., sign-ups, purchases, downloads)

- Click-Through Rate (CTR): the share of visitors who click a specific element, such as a CTA button

- Bounce Rate: the share of visitors who leave without taking any further action

- Time on Page: how long visitors stay on each version

By analyzing these metrics, you can identify which version leads to better engagement and ultimately contributes to business goals.
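If your testing tool lets you export raw visit data, the four metrics above can be computed per variant in a few lines. The sketch below is a minimal Python example that assumes a hypothetical export where each visit is a `Session` record with a variant label, three yes/no flags, and seconds on page; real exports (and how each tool defines a bounce) will differ.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One visit from a hypothetical raw-data export (field names are illustrative)."""
    variant: str            # "A" or "B"
    converted: bool         # completed the goal (sign-up, purchase, download)
    clicked_cta: bool       # clicked the tracked CTA button
    bounced: bool           # left without taking any further action
    seconds_on_page: float


def summarize(sessions: list[Session]) -> dict[str, dict[str, float]]:
    """Return visitors, conversion rate, CTR, bounce rate and mean time on page per variant."""
    summary: dict[str, dict[str, float]] = {}
    for variant in sorted({s.variant for s in sessions}):
        group = [s for s in sessions if s.variant == variant]
        n = len(group)
        summary[variant] = {
            "visitors": n,
            # Summing booleans counts the True values.
            "conversion_rate": sum(s.converted for s in group) / n,
            "ctr": sum(s.clicked_cta for s in group) / n,
            "bounce_rate": sum(s.bounced for s in group) / n,
            "avg_time_on_page": sum(s.seconds_on_page for s in group) / n,
        }
    return summary
```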

How to Analyze A/B Testing Results

To get meaningful insights from your A/B testing results, follow these key steps:

Step 1: Ensure Statistical Significance

One of the most common mistakes in A/B testing is ending a test too early. A test needs enough data before you can tell whether its results are statistically significant.

What is statistical significance? A result is statistically significant when the observed difference between Version A and Version B would be unlikely to occur if the two versions actually performed the same, so it is unlikely to be explained by chance alone. Most testing platforms (like PageTest.ai, Optimizely, and VWO) calculate this for you, but you can also use an A/B testing significance calculator.

Rule of thumb:

- Aim for at least 95% statistical confidence (a p-value below 0.05) before drawing conclusions.

- More data makes results more reliable, so decide on a sample size before the test starts and keep it running until you reach it.
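For a conversion-rate test, one common way such calculators work is a two-proportion z-test. The sketch below is a minimal version using only Python's standard library; the function name `two_proportion_z_test` and the visitor counts are hypothetical, and commercial platforms may use different methods (some use Bayesian statistics instead).

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided pooled z-test for a difference in conversion rates.

    Returns (z, p_value). Relies on the normal approximation, which is
    reasonable when each variant has a decent number of conversions.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, so nothing to test
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 4.2% vs. 5.5% conversion with 5,000 visitors per variant.
z, p = two_proportion_z_test(conv_a=210, n_a=5000, conv_b=275, n_b=5000)
significant = p < 0.05  # the 95% confidence rule of thumb
```

With 5,000 visitors per variant, these rates give a p-value well below 0.05; with only 1,000 visitors per variant (42 vs. 55 conversions), the same two rates do not reach significance.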

Step 2: Compare Conversion Rates

The first thing to check in your A/B testing results is the conversion rate.

- If Version B has a higher conversion rate than Version A and the difference is statistically significant, Version B is likely the better choice.

- If the difference is small or not yet significant, you may need a larger sample size before you can draw a conclusion.
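How big is "big enough"? A standard approximation for comparing two conversion rates estimates the visitors each variant needs from the baseline rate, the rate you hope to detect, the significance level (alpha) and the statistical power. The sketch below assumes a two-sided test at 95% confidence and 80% power; `sample_size_per_variant` is a hypothetical helper, not a feature of any particular tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_baseline: float, p_target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variant to detect a change from
    p_baseline to p_target with a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 when power = 0.80
    p_bar = (p_baseline + p_target) / 2
    spread = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
              + z_beta * math.sqrt(p_baseline * (1 - p_baseline)
                                   + p_target * (1 - p_target)))
    # The detectable difference is squared in the denominator.
    return math.ceil(spread ** 2 / (p_target - p_baseline) ** 2)


# Baseline 4.2%, hoping to detect an improvement to 5.5%.
n_needed = sample_size_per_variant(0.042, 0.055)
```

For the 4.2% to 5.5% example, this comes to a little over 4,000 visitors per variant. Halving the difference you want to detect roughly quadruples the required sample, because that difference is squared in the denominator.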

Example:

- Version A (original landing page) conversion rate: 4.2%

- Version B (new design) conversion rate: 5.5%

- Improvement: 31% relative increase in conversion rate (1.3 percentage points)

If the test has also reached statistical significance, an increase this large gives you good grounds to implement Version B.
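Note that the 31% figure is a relative lift: the absolute gain is 1.3 percentage points. Whether that gain is statistically significant depends on how many visitors saw each version, not on the size of the lift alone. The sketch below computes the relative lift and a 95% confidence interval for the absolute difference using the simple Wald interval; the visitor counts (5,000 and 1,000 per variant) are hypothetical.

```python
import math


def lift_with_ci(conv_a: int, n_a: int, conv_b: int, n_b: int,
                 z: float = 1.96) -> tuple[float, tuple[float, float]]:
    """Relative lift of B over A, plus a Wald confidence interval
    (95% by default) for the absolute difference in conversion rates, B - A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    relative_lift = diff / p_a
    # Unpooled standard error of the difference between two proportions.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return relative_lift, (diff - z * se, diff + z * se)


# The same 4.2% vs. 5.5% rates at two hypothetical sample sizes.
lift, ci_large = lift_with_ci(210, 5000, 275, 5000)
_, ci_small = lift_with_ci(42, 1000, 55, 1000)
```

With 5,000 visitors per variant, the interval stays above zero, so the improvement is significant at the 95% level; with 1,000 per variant, the interval includes zero, and the same rates would not justify a rollout on their own.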

Step 3: Analyze Secondary Metrics

Conversion rate is crucial, but other metrics can provide deeper insights:

- Bounce Rate: A lower bounce rate indicates better user engagement.

- Time on Page: Longer time on page suggests users find the content more engaging.

- Click-Through Rate (CTR): A higher CTR means more users are interacting with your content.

Example:

- Version A: 70% bounce rate vs. Version B: 50% bounce rate → Version B keeps more visitors on the site.

- Version A: 1:30 average time on page vs. Version B: 2:15 average time on page → Version B holds visitors’ attention longer.
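Secondary metrics need their own significance checks. Bounce rate is a proportion, so it can be tested the same way as conversion rate; time on page is an average, so a comparison of means fits better. The sketch below converts "m:ss" figures like those above to seconds and computes Welch's t statistic on per-session times. To stay within the standard library, it approximates the p-value with the normal distribution, an assumption that only holds for large samples (hundreds of sessions per variant). Time-on-page data is often heavily skewed, so treat this as a rough check. The function names are hypothetical.

```python
import math
from statistics import mean, variance


def mmss_to_seconds(text: str) -> int:
    """Convert an "m:ss" duration such as "2:15" to seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + int(seconds)


def compare_mean_time(times_a: list[float], times_b: list[float]) -> tuple[float, float]:
    """Welch's t statistic for mean time on page (B minus A), with a two-sided
    p-value from the normal approximation (assumes large samples in both variants)."""
    # Welch's standard error uses each variant's own sample variance.
    se = math.sqrt(variance(times_a) / len(times_a) + variance(times_b) / len(times_b))
    t = (mean(times_b) - mean(times_a)) / se
    p_value = math.erfc(abs(t) / math.sqrt(2))
    return t, p_value
```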

Step 4: Segment Your Results by Audience

Not all users behave the same way. Segmenting your A/B testing results by audience type can reveal important insights:

- Device Type: Do mobile users react differently than desktop users?

- Traffic Source: Are organic visitors behaving differently than paid traffic?

- New vs. Returning Users: Is the variation more effective for new visitors or loyal customers?

Example: If Version B outperforms Version A on desktop but not on mobile, you may need to optimize the mobile experience further.
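A segment breakdown is a group-by over your raw results. This minimal sketch assumes each visit is a hypothetical `(segment, variant, converted)` tuple, such as `("mobile", "B", True)`, and returns a conversion rate for each variant within each segment.

```python
from collections import defaultdict
from collections.abc import Iterable


def conversion_by_segment(rows: Iterable[tuple[str, str, bool]]) -> dict[str, dict[str, float]]:
    """Conversion rate for each variant within each segment.

    rows: (segment, variant, converted) tuples, e.g. ("mobile", "B", True).
    """
    # (segment, variant) -> [conversions, visitors]
    counts: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])
    for segment, variant, converted in rows:
        tally = counts[(segment, variant)]
        tally[0] += int(converted)
        tally[1] += 1
    rates: dict[str, dict[str, float]] = defaultdict(dict)
    for (segment, variant), (conversions, visitors) in counts.items():
        rates[segment][variant] = conversions / visitors
    return dict(rates)
```

Each segment contains only part of your traffic, so a difference inside one segment needs its own significance check before you act on it.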

Step 5: Identify Patterns and Trends

A single test is useful, but long-term trends provide deeper insights. Keep track of testing results over multiple tests to identify consistent patterns in user behavior.

Example: If multiple tests show that large, bold CTAs increase conversions, you can apply this insight across future campaigns.
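Once results are logged consistently, spotting patterns can be as simple as counting how often each type of change produced a significant win. The sketch below assumes a hypothetical log of `(change_type, relative_lift, significant)` tuples; the entries shown are made up for illustration.

```python
from collections import Counter


def win_rate_by_change(tests: list[tuple[str, float, bool]]) -> dict[str, float]:
    """Share of tests per change type that ended in a significant positive lift."""
    runs = Counter()
    wins = Counter()
    for change_type, relative_lift, significant in tests:
        runs[change_type] += 1
        if significant and relative_lift > 0:
            wins[change_type] += 1
    return {change: wins[change] / runs[change] for change in runs}


# Made-up log entries for illustration only.
past_tests = [
    ("bold_cta", 0.12, True),
    ("bold_cta", 0.08, True),
    ("bold_cta", 0.02, False),
    ("new_headline", -0.03, False),
    ("new_headline", 0.05, True),
]
rates = win_rate_by_change(past_tests)
```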

Common Pitfalls When Interpreting Results

Even experienced marketers can make mistakes when analyzing testing results. Here are some common pitfalls to avoid:

1. Stopping the Test Too Early

Without enough data, you risk making decisions based on chance rather than meaningful differences.
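Why is stopping early so risky? If you check results repeatedly and stop at the first significant reading, the chance of a false positive rises well above the nominal 5%. The minimal simulation below illustrates this with A/A tests, where both variants share the same true conversion rate, so every "winner" is a false positive. All parameters (500 experiments, 10 looks of 200 visitors per variant, a 10% conversion rate) are arbitrary assumptions, and `simulate_peeking` is a hypothetical helper.

```python
import math
import random


def ab_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test (normal approximation)."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_peeking(n_experiments: int = 500, looks: int = 10,
                     visitors_per_look: int = 200, rate: float = 0.10,
                     alpha: float = 0.05, seed: int = 1) -> tuple[float, float]:
    """Simulate A/A tests (no real difference) and return two false-positive rates:
    (checking once at the planned end, stopping at the first significant look)."""
    rng = random.Random(seed)
    fixed_hits = peeking_hits = 0
    for _ in range(n_experiments):
        conv_a = conv_b = visitors = 0
        ever_significant = False
        significant_now = False
        for _look in range(looks):
            # Every visitor converts with the same probability in both variants.
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            visitors += visitors_per_look
            significant_now = ab_p_value(conv_a, visitors, conv_b, visitors) < alpha
            ever_significant = ever_significant or significant_now
        fixed_hits += significant_now       # the last look is the planned end
        peeking_hits += ever_significant    # a "peeker" stops at any significant look
    return fixed_hits / n_experiments, peeking_hits / n_experiments


fixed_rate, peeking_rate = simulate_peeking()
```

The second rate (stopping at the first significant look) comes out noticeably higher than the first (checking once at the planned end), even though neither variant is actually better.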

2. Ignoring Statistical Significance

If a result isn’t statistically significant, implementing changes based on it can be risky.

3. Not Testing for Long Enough

Seasonality, traffic fluctuations, and random variance can impact test results. Ensure tests run for at least one to two weeks (or more for low-traffic sites).
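To turn a required sample size into a test duration, divide the total visitors needed by your daily traffic and round up to whole weeks, so each day of the week is represented equally. The sketch below assumes all eligible traffic enters the test and is split evenly across variants; `planned_duration_days` is a hypothetical helper.

```python
import math


def planned_duration_days(sample_per_variant: int, variants: int,
                          daily_visitors: int, min_weeks: int = 1) -> int:
    """Days needed to reach the planned sample, rounded up to whole weeks."""
    days_of_traffic = math.ceil(sample_per_variant * variants / daily_visitors)
    weeks = max(min_weeks, math.ceil(days_of_traffic / 7))
    return weeks * 7
```

For example, about 4,300 visitors per variant across two variants at 1,000 visitors a day takes 9 days of traffic, which rounds up to a 14-day test.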

4. Focusing Only on One Metric

Optimizing only for conversions might lead to unintended consequences, such as a higher bounce rate or reduced user satisfaction.
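One way to avoid optimizing a single number is to define guardrail metrics up front and reject a winner that harms them. In the sketch below, each guardrail's relative change (B vs. A) is checked against a tolerance, taking into account whether higher is better for that metric. The 5% tolerance, the metric names, and the `guardrail_violations` helper are illustrative assumptions, not recommended values.

```python
def guardrail_violations(changes: dict[str, float],
                         higher_is_better: dict[str, bool],
                         tolerance: float = 0.05) -> list[str]:
    """Names of guardrail metrics that moved the wrong way by more than `tolerance`.

    changes: relative change of each metric for B vs. A (0.10 means +10%).
    higher_is_better: direction of improvement for each metric.
    """
    violations = []
    for metric, change in changes.items():
        # Express every change as "harm": positive means the metric got worse.
        harm = -change if higher_is_better[metric] else change
        if harm > tolerance:
            violations.append(metric)
    return violations


violations = guardrail_violations(
    changes={"bounce_rate": 0.10, "avg_time_on_page": -0.02, "ctr": 0.04},
    higher_is_better={"bounce_rate": False, "avg_time_on_page": True, "ctr": True},
)
```

In this example, the 10% rise in bounce rate is flagged even though CTR improved, so the variation would need a closer look before rollout.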

5. Failing to Consider External Factors

A sudden spike in traffic from a sale or social media campaign can skew your results. Always account for anomalies when analyzing data.
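A crude first check for anomalies is to flag days whose traffic is far above normal. The sketch below marks any day with more than twice the median daily visits; the threshold and the `flag_traffic_spikes` name are arbitrary assumptions, and a flagged day is a prompt to investigate (a sale, an email blast, bot traffic), not proof that the data is bad.

```python
from statistics import median


def flag_traffic_spikes(daily_visits: dict[str, int], factor: float = 2.0) -> list[str]:
    """Return the days whose visits exceed `factor` times the median day.

    A crude heuristic: flagged days are candidates for investigation,
    not automatic exclusions.
    """
    typical = median(daily_visits.values())
    return [day for day, visits in daily_visits.items() if visits > factor * typical]
```

If a flagged day coincides with a campaign, check whether the result still holds when you look at the test with and without that period.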

How to Apply A/B Testing Results to Your Strategy

Once you’ve successfully analyzed your testing results, it’s time to apply them to optimize your strategy. Here’s how:

1. Implement the Winning Variation

If one version clearly outperforms the other, roll out the winning design or strategy across your website or campaign.

2. Document and Learn

Keep a record of test results, including what was tested, key findings, and actionable insights. This will help refine future tests and avoid repeating experiments.
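A consistent record format makes past tests easy to search and compare. The sketch below is one hypothetical format: each test becomes an `ExperimentRecord` written as a single line of JSON, so the log can grow over time and be read back with any JSON tool. The field names are illustrative; record whatever your team actually needs.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ExperimentRecord:
    """One entry in a hypothetical test log; field names are illustrative."""
    name: str
    hypothesis: str
    primary_metric: str
    rate_a: float
    rate_b: float
    p_value: float
    decision: str                                    # e.g. "ship B", "keep A", "rerun"
    learnings: list[str] = field(default_factory=list)


def to_json_line(record: ExperimentRecord) -> str:
    """Serialize one record as a single line of JSON (JSON Lines format)."""
    return json.dumps(asdict(record)) + "\n"


def append_to_log(record: ExperimentRecord, path: str) -> None:
    """Append the record to a .jsonl file, creating the file if needed."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(to_json_line(record))
```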

3. Run Follow-Up Tests

A/B testing is an ongoing process. Once you’ve identified a winning variation, test further refinements to maximize performance.

Example:

- If a new headline increases conversions, test additional CTA placements to see if engagement can improve further.

4. Apply Insights Across Channels

Insights from A/B testing can be applied to:

- Email marketing (e.g., subject lines, CTA buttons)

- Social media ads (e.g., images, ad copy)

- Product pages (e.g., descriptions, layouts)

Final Thoughts: Making the Most of Your Results

Understanding and analyzing A/B testing results is crucial for making data-driven decisions in marketing and UX optimization. By following best practices—ensuring statistical significance, analyzing multiple metrics, and segmenting data—you can gain valuable insights that drive real business growth.

Key Takeaways:

✔ Always let your tests run long enough for meaningful data.

✔ Look beyond conversion rates: analyze bounce rates, CTR, and user behavior.

✔ Segment your audience to uncover deeper insights.

✔ Apply findings strategically and continue refining through follow-up tests.

By mastering the interpretation of A/B testing results, you can optimize your digital strategy, improve user experience, and boost conversion rates with confidence. Happy testing!

Q&A: Interpreting A/B Testing Results

What are A/B testing results?

They’re the data collected after comparing two versions of a webpage or campaign to see which performs better.

How do I know if my A/B test is successful?

Check if your results are statistically significant (typically 95% confidence) and show a meaningful improvement in your key metric.

What metrics should I look at?

Focus on conversion rate, bounce rate, time on page, and click-through rate for a fuller picture.

Why segment A/B testing results?

Different audiences (mobile vs desktop, new vs returning users) may respond differently to variations.

What if results aren’t conclusive?

Don’t panic—use the data to refine your hypothesis and run a follow-up test.

