Best Practices for AI Integration in Cross-Platform Testing

01-10-2025 (Last modified: 03-11-2025)

AI is transforming cross-platform testing by automating repetitive tasks, improving accuracy, and speeding up workflows. The result is that applications are more likely to behave consistently across devices, operating systems, and browsers, with less time and fewer resources spent on verification.

Key Takeaways:

- AI tools automate tasks like regression testing, visual testing, and test data generation.

- Machine learning detects performance issues, functionality gaps, and UI inconsistencies.

- Businesses benefit from faster release cycles, reduced costs, and better defect detection.

- Combining AI automation with manual testing ensures thorough results.

- Features like no-code test creation, AI-generated content variations, and performance tracking simplify testing.

Challenges to Address:

- Device and platform fragmentation.

- Performance discrepancies across environments.

- Maintaining UI/UX consistency and accessibility.

Pro Tip: Use AI-powered tools like PageTest.AI to manage tests without coding, generate content variations, and track performance metrics efficiently. Balance automation with human oversight for the best results.

Best Practices for AI Integration in Cross-Platform Testing

To make the most of AI in cross-platform testing, it’s important to strike a balance between automation and human oversight. By following these strategies, teams can use AI to streamline workflows while ensuring high-quality and accurate testing results across all platforms.

Automating Test Execution with AI

At the heart of effective AI integration is automation that adapts seamlessly to different environments. AI-driven tools can adjust to various screen sizes, operating systems, and browser behaviors without needing separate test scripts for each platform.

Start by targeting repetitive tasks that take up the most time. Regression testing is a prime example. It involves running the same test cases across multiple platforms after updates. AI can handle these tests simultaneously across devices and browsers, slashing testing times from days to just hours.

Visual regression testing is another area where AI shines. These tools can differentiate between meaningful changes that impact the user experience, such as a shifted or missing button, and rendering noise, such as sub-pixel anti-aliasing differences in text.
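The idea of separating meaningful changes from rendering noise can be sketched with a plain tolerance check. This is a minimal illustration, not how any particular AI tool works: real products typically use learned models, whereas the sketch below only ignores small per-channel color differences and flags a screenshot when too many pixels change. The function names, the `channel_tolerance` of 16, and the 1% threshold are all assumptions chosen for the example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0-255
Image = List[List[Pixel]]      # rows of pixels


def changed_fraction(baseline: Image, candidate: Image, channel_tolerance: int = 16) -> float:
    """Return the fraction of pixels that differ by more than the tolerance."""
    if len(baseline) != len(candidate) or any(
        len(a) != len(b) for a, b in zip(baseline, candidate)
    ):
        raise ValueError("images must have identical dimensions")
    total = 0
    changed = 0
    for row_a, row_b in zip(baseline, candidate):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += 1
            # A pixel counts as changed only if some channel moves past the tolerance.
            if max(abs(x - y) for x, y in zip(pixel_a, pixel_b)) > channel_tolerance:
                changed += 1
    return changed / total if total else 0.0


def is_meaningful_change(baseline: Image, candidate: Image,
                         max_changed_fraction: float = 0.01) -> bool:
    """Flag the candidate when more than the allowed share of pixels changed."""
    return changed_fraction(baseline, candidate) > max_changed_fraction
```

A slightly off-white render of a white page stays below the tolerance and is ignored, while a block of black pixels where white was expected is flagged.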

AI also simplifies content optimization. Tools like PageTest.AI offer no-code solutions for creating, testing, and tracking variations in headlines, button texts, and call-to-action placements across devices – saving time and effort.

Additionally, test data management benefits greatly from automation. Instead of manually creating datasets for different platforms, AI can generate realistic, platform-specific scenarios, ensuring thorough testing.

While AI is excellent for these tasks, it’s vital to incorporate manual testing to address areas where human judgment is crucial, such as exploratory testing and user experience.

Combining AI Automation with Manual Testing

AI may excel at repetitive tasks, but human insight is irreplaceable when it comes to exploring user experience and unexpected scenarios. The most effective testing strategies combine AI’s precision and speed with human creativity and intuition.

When basic functionality and performance tests are automated, manual testers can focus on more complex user journeys and edge cases. Humans are better equipped to explore how users might interact with an application in ways that AI can’t predict.

This approach works particularly well for usability testing. AI can quickly pinpoint technical issues like broken links or slow load times, while human testers evaluate whether the interface is intuitive and navigation flows make sense.

AI-assisted manual testing can further enhance this process. For example, AI tools can provide real-time feedback as testers explore an application, flagging issues like memory spikes or sluggish performance on specific devices.

To make this collaboration effective, define clear roles. Let AI handle tasks requiring consistency and scale, while humans focus on subjective evaluations and creative problem-solving. This ensures thorough testing without unnecessary overlap.

In addition to blending AI and manual testing, it’s crucial to maintain broad and rigorous coverage across all user environments.

Achieving Complete Test Coverage

To ensure thorough test coverage, you need to address both widely used and emerging platforms, as well as edge cases.

AI can analyze traffic patterns to prioritize the most relevant device-browser combinations. This eliminates the need to manually test every possible scenario and focuses efforts on the most impactful areas.
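Traffic-based prioritization can be illustrated without any machine learning at all. The sketch below assumes you already have session counts per device-browser combination (the sample numbers are invented) and picks the smallest set of top combinations that covers a target share of traffic. The function name and the 90% target are assumptions for the example.

```python
from typing import Dict, List, Tuple

Combo = Tuple[str, str]  # (device or OS, browser)


def prioritize_combos(sessions: Dict[Combo, int], target_coverage: float = 0.9) -> List[Combo]:
    """Pick the highest-traffic combinations until the target share of sessions is covered."""
    total = sum(sessions.values())
    if total == 0:
        return []
    selected: List[Combo] = []
    covered = 0
    for combo, count in sorted(sessions.items(), key=lambda item: item[1], reverse=True):
        if covered / total >= target_coverage:
            break
        selected.append(combo)
        covered += count
    return selected


# Hypothetical traffic data, for illustration only.
sample_sessions = {
    ("iPhone", "Safari"): 4200,
    ("Android", "Chrome"): 3100,
    ("Windows", "Chrome"): 1800,
    ("macOS", "Safari"): 500,
    ("Windows", "Firefox"): 250,
    ("Linux", "Firefox"): 150,
}
print(prioritize_combos(sample_sessions))
```

With this sample data, three combinations already account for 91% of sessions, so the remaining three can be tested less frequently.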

Network condition testing is another critical aspect. AI can simulate different network speeds, connection stabilities, and bandwidth limitations, which are especially important for mobile users who may face slower connections compared to desktop users.
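To see why network conditions matter, a rough back-of-the-envelope model helps: load time is approximately the round-trip latency multiplied by the number of round trips, plus the page size divided by bandwidth. The sketch below applies that model to a few network profiles. The profile values, the default of four round trips, and the function names are illustrative assumptions, not presets from any specific tool, and real page loads involve many more factors.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class NetworkProfile:
    bandwidth_mbps: float  # downstream bandwidth in megabits per second
    rtt_ms: float          # round-trip latency in milliseconds


# Hypothetical profiles, for illustration only.
PROFILES: Dict[str, NetworkProfile] = {
    "broadband": NetworkProfile(bandwidth_mbps=50.0, rtt_ms=20.0),
    "4g": NetworkProfile(bandwidth_mbps=9.0, rtt_ms=170.0),
    "slow-3g": NetworkProfile(bandwidth_mbps=0.4, rtt_ms=400.0),
}


def estimate_load_seconds(page_bytes: int, profile: NetworkProfile, round_trips: int = 4) -> float:
    """Estimate load time as latency cost plus transfer time."""
    latency_seconds = (profile.rtt_ms / 1000.0) * round_trips
    transfer_seconds = (page_bytes * 8) / (profile.bandwidth_mbps * 1_000_000)
    return latency_seconds + transfer_seconds


def profiles_over_budget(page_bytes: int, budget_seconds: float) -> List[str]:
    """Return the names of profiles whose estimated load time exceeds the budget."""
    return [
        name
        for name, profile in PROFILES.items()
        if estimate_load_seconds(page_bytes, profile) > budget_seconds
    ]
```

Under these assumptions, a 2 MB page loads in well under a second on broadband but takes over 40 seconds on the slowest profile, which is the kind of gap that desktop-only testing hides.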

Progressive enhancement testing is equally essential. AI can evaluate how your application performs when certain features aren’t supported, when JavaScript is disabled, or when older browser versions are used. This ensures that all users, regardless of their platform, have access to a functional experience – even if advanced features aren’t available.

For ongoing reliability, implement continuous cross-platform monitoring. AI can constantly assess your application’s performance across various environments, catching issues caused by third-party updates, new browser versions, or changing platform behaviors.

Lastly, don’t overlook accessibility testing. Different operating systems and browsers handle assistive technologies in unique ways. AI can automatically test for keyboard navigation, screen reader compatibility, and color contrast to ensure your application is accessible to all users, no matter their device or platform.
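Color contrast is the most mechanical of these checks, because WCAG defines it with a formula: compute the relative luminance of each color, then take the ratio of the lighter to the darker with 0.05 added to both. The sketch below follows that definition and checks against the WCAG AA thresholds of 4.5:1 for normal text and 3:1 for large text. The function names are made up for the example.

```python
from typing import Tuple

RGB = Tuple[int, int, int]


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(color: RGB) -> float:
    r, g, b = (_linearize(ch) for ch in color)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: RGB, background: RGB) -> float:
    """Return the WCAG contrast ratio, from 1.0 (identical) to 21.0 (black on white)."""
    lum_a = relative_luminance(foreground)
    lum_b = relative_luminance(background)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: RGB, background: RGB, large_text: bool = False) -> bool:
    """Check the ratio against the WCAG AA minimum for the given text size."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold
```

For instance, the gray `#767676` on a white background passes AA for normal text, while the marginally lighter `#777777` does not.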

Key Features to Look for in AI Testing Tools

When choosing an AI-powered cross-platform testing tool, it’s essential to focus on features that simplify workflows while delivering precise, actionable insights across devices. These core capabilities ensure your testing process is both efficient and impactful.

No-Code Test Creation and Management

Creating and managing tests without writing a single line of code is a game-changer for teams aiming to move quickly. No-code platforms empower team members from various backgrounds to design and execute complex tests without relying on developers.

The best tools offer visual test builders that allow you to point, click, and select elements for testing. These interfaces make it easy to adjust headlines, buttons, images, and other components directly through a browser extension or web-based platform.

Take PageTest.AI, for example. It provides a Chrome extension that enables users to instantly select elements and set up tests. This means you can test headlines, call-to-action buttons, product descriptions, and more – all without needing technical expertise. Such tools are particularly useful for cross-platform testing, as they integrate seamlessly with website builders like WordPress, Wix, and Shopify.

A centralized dashboard is another must-have feature. It helps you monitor, pause, and organize tests by website, campaign, or team, which is especially helpful when juggling multiple cross-platform experiments.

Building on this ease of use, advanced AI tools also streamline content variation.

AI-Generated Content Variations

Manually creating A/B test content is tedious and often limited in scope. AI-generated content variations address this challenge by automatically producing multiple versions of your content, using principles proven to drive engagement and conversions.

Top-tier AI testing tools analyze your existing content and generate variations that experiment with different messaging strategies, psychological triggers, and writing styles. Instead of simply swapping out a few words, these tools craft fundamentally different approaches to test a broader range of ideas.

For instance, an advanced tool might generate both a headline emphasizing urgency and another highlighting benefits, giving you diverse angles to test. These tools also factor in audience psychology, industry norms, and conversion optimization techniques to create variations that resonate with your target audience.

For cross-platform testing, this feature shines even brighter. AI can adapt content for different screen sizes and formats, ensuring a headline that works on desktop also performs well on mobile. By automatically generating platform-specific versions, these tools save time and improve test accuracy.

Performance Tracking and Analytics

Creating tests is only half the battle – understanding their performance is where the real value lies. Robust analytics capabilities are essential for evaluating results and refining strategies across platforms.

Your AI testing platform should go beyond simple conversion rates. Look for tools that track a variety of metrics, such as click-through rates, engagement levels, scroll depth, and user behavior. These insights help you understand not just what works, but why it works. For cross-platform testing, analytics should also segment performance by device type, operating system, and browser.
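Segmenting results is straightforward once each visit records its device, operating system, and browser. The sketch below assumes a hypothetical event format, a list of dictionaries with a boolean `converted` field, and computes the conversion rate for each value of a chosen segment key. The field names are assumptions for the example, not any platform's export schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def conversion_by_segment(events: Iterable[Mapping[str, object]], key: str = "device") -> Dict[str, float]:
    """Return the conversion rate per segment value (for example per device type)."""
    visits: Dict[str, int] = defaultdict(int)
    conversions: Dict[str, int] = defaultdict(int)
    for event in events:
        segment = str(event[key])
        visits[segment] += 1
        if event["converted"]:
            conversions[segment] += 1
    return {segment: conversions[segment] / visits[segment] for segment in visits}


# Hypothetical events, for illustration only.
sample_events = [
    {"device": "desktop", "browser": "Chrome", "converted": True},
    {"device": "desktop", "browser": "Firefox", "converted": False},
    {"device": "mobile", "browser": "Safari", "converted": True},
    {"device": "mobile", "browser": "Safari", "converted": False},
    {"device": "mobile", "browser": "Chrome", "converted": False},
    {"device": "mobile", "browser": "Chrome", "converted": False},
]
print(conversion_by_segment(sample_events, key="device"))
```

Passing `key="browser"` instead groups the same events by browser, which makes it easy to spot a variation that wins on desktop but loses on mobile.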

Real-time reporting is another critical feature. Tools that update metrics continuously allow you to identify trends early and make adjustments without waiting for overnight data processing. This can significantly enhance the efficiency of your testing cycles.

The analytics interface should present data in clear, easy-to-read charts, ideally with indicators for statistical significance. For businesses in the U.S., ensure the platform uses familiar formats, like MM/DD/YYYY for dates and standard measurement units.
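A significance indicator usually rests on a statistical test. One common choice for comparing two conversion rates is the two-proportion z-test, sketched below using only the standard library. This is an assumption about how such an indicator could be computed, not a description of any specific platform's method; some tools use Bayesian or sequential approaches instead.

```python
import math


def two_proportion_p_value(conversions_a: int, visitors_a: int,
                           conversions_b: int, visitors_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if standard_error == 0:
        return 1.0
    z = (rate_b - rate_a) / standard_error
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


def is_significant(p_value: float, alpha: float = 0.05) -> bool:
    return p_value < alpha
```

With 1,000 visitors per variant, 100 versus 150 conversions is clearly significant at the 5% level, while 100 versus 105 is not, even though both show a "winner" on a raw chart.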

Exporting data is another often-overlooked feature. Whether you need to share results with stakeholders or integrate testing data into other business intelligence tools, having options like CSV exports, API access, or direct integrations with analytics platforms is invaluable.

Additionally, cross-platform performance tracking should include technical metrics such as page load times, rendering speeds, and compatibility across browsers and devices. These details help pinpoint whether performance issues are due to platform-specific limitations or content-related factors.

Common Challenges in AI-Driven Cross-Platform Testing

AI has transformed cross-platform testing by automating many tedious processes, but it also brings its own set of challenges. Tackling these obstacles is essential to effectively integrate AI into testing workflows. By understanding these issues ahead of time, teams can craft better strategies and sidestep common pitfalls that could disrupt their testing efforts.

Managing Device and Platform Fragmentation

The sheer variety of devices and operating systems makes comprehensive testing a complex task. When resources are tight, prioritizing devices becomes crucial. AI tools can assist by analyzing user traffic data to pinpoint the most relevant device-platform combinations for your audience.

Screen resolution differences add another layer of complexity. For instance, a button that looks perfect on a desktop monitor might be too small on a smartphone. AI-powered responsive testing tools can simulate how content renders across different screen sizes, flagging potential issues before they affect users.

Then there’s the challenge of browser compatibility. Browsers are built on different engines (Blink in Chrome and Edge, WebKit in Safari, Gecko in Firefox), and each can interpret CSS, JavaScript, and HTML slightly differently. A feature that works seamlessly in one browser might break in another due to subtle rendering differences. AI testing platforms address this by running tests in parallel across multiple browsers and flagging inconsistencies as they appear.

Older devices with limited memory and processing power can also cause performance bottlenecks. These issues may not surface during desktop testing but can significantly impact user experience. AI tools can simulate these constraints by throttling CPU and memory during tests, helping teams understand how applications perform under resource-limited conditions.

These fragmentation challenges often lead to performance differences that vary across platforms.

Fixing Performance Discrepancies

Performance issues don’t always appear uniformly across platforms. A website that loads quickly on a desktop might take much longer to load over a mobile network, creating a frustrating experience for users.

Simulating network conditions is a key strategy to uncover these differences early. AI-powered testing tools can mimic a range of connection speeds – from fast broadband to slower mobile networks – revealing how load times vary. This is especially critical for businesses catering to users in regions with limited internet access.

Oversized images, conflicting scripts, and third-party integrations like social media widgets or analytics tags can also cause slowdowns. AI tools can monitor these elements, track their impact on performance, and alert teams when they introduce delays.

Caching behavior is another factor that varies by platform and can influence how quickly returning users access content. AI tools can analyze user behavior to optimize caching strategies tailored to specific platforms, ensuring faster load times for repeat visitors.

While performance is vital, ensuring a seamless and accessible user experience across platforms is equally important.

Maintaining UI/UX Consistency and Accessibility

Delivering a consistent UI/UX across devices requires careful attention to detail, as even small rendering differences can disrupt the user experience. Variations in font rendering, color accuracy, and spacing between devices can result in a fragmented brand presentation.

Font fallback issues are a common culprit, causing layout shifts when custom fonts fail to load correctly on certain devices. Similarly, color accuracy can differ across displays, potentially impacting brand perception. AI-driven visual regression testing can catch these problems, simulating how designs appear on various devices and identifying unexpected font or layout changes.

On mobile platforms, touch target sizing is critical. Buttons designed for mouse clicks might be too small for touch navigation, frustrating users. AI accessibility testing can detect touch targets that fall below recommended sizes and suggest adjustments to improve usability.
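A touch target check reduces to comparing each interactive element's rendered size against a minimum. The sketch below assumes element dimensions have already been collected in CSS pixels (for example from a browser automation run) and uses a default minimum of 24 by 24, which matches the WCAG 2.2 minimum target size criterion; platform design guidelines commonly recommend larger targets, so the threshold is a parameter. The `Element` class and the sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Element:
    selector: str   # how the element is identified in the page
    width: float    # rendered width in CSS pixels
    height: float   # rendered height in CSS pixels


def undersized_targets(elements: List[Element], min_size: float = 24.0) -> List[str]:
    """Return selectors of elements smaller than the minimum in either dimension."""
    return [
        element.selector
        for element in elements
        if element.width < min_size or element.height < min_size
    ]


# Hypothetical measurements, for illustration only.
sample_elements = [
    Element("#buy-now", 120, 48),
    Element(".close-icon", 16, 16),
    Element("a.footer-link", 80, 18),
]
print(undersized_targets(sample_elements))
```

Raising `min_size` to 44 applies a stricter rule in line with larger-target recommendations.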

Keyboard navigation is another crucial aspect, particularly for users relying on assistive technologies. AI tools can simulate keyboard-only navigation to identify elements that may be inaccessible without a mouse, ensuring a consistent experience for all users.

Content overflow is yet another challenge. Text that fits neatly on a desktop screen might get cut off or require horizontal scrolling on smaller devices. AI-powered layout testing can analyze how content behaves across different screen sizes and recommend changes to improve responsiveness.

Finally, maintaining consistent loading states across platforms is essential for a smooth user experience. Users expect clear feedback when content is loading, but different platforms may handle loading indicators inconsistently – or skip them entirely. AI tools can verify uniformity in loading states, ensuring users receive the same feedback regardless of device or platform.

Conclusion and Key Takeaways

Integrating AI into cross-platform testing can drastically improve efficiency, cutting release times by up to 60% and reducing flaky tests by 50%. These advancements not only save time but also enhance the reliability of your testing processes.

To fully capitalize on these benefits, it’s crucial to adopt a balanced approach. Start by prioritizing high-impact user journeys and leveraging analytics to identify the devices and platforms that matter most for your audience. By focusing on critical areas first and expanding coverage methodically, you can ensure your testing efforts deliver the best possible return on investment.

No-code AI testing platforms, like PageTest.AI, are breaking down technical barriers, making cross-platform testing more accessible. For example, PageTest.AI offers features like AI-generated test variations and performance tracking, enabling teams to execute comprehensive testing strategies faster and with fewer resources.

Cloud-based AI testing solutions further simplify the process by reducing the reliance on physical device labs and manual setup. This shift accelerates time-to-market while improving the overall user experience, and intelligent test prioritization lets teams focus their energy on the areas with the most significant impact.

"AI and automation are vital for managing complex workflows and fast delivery cycles. AI-driven platforms enable QA teams to author, execute, and manage tests at speed and scale, without requiring programming expertise," says Yuvarani Elankumaran, a technical consultant at ACCELQ.

While challenges like device fragmentation, performance inconsistencies, and UI/UX discrepancies still exist, AI-powered strategies are designed to address these issues systematically. Success lies in understanding these challenges from the outset and tailoring your testing strategies to tackle them effectively.

As you move forward, keep an eye on key metrics like test coverage, execution time, defect leakage, and flaky test rates. These metrics provide valuable insights for continuous improvement. Align your strategies with these performance indicators to refine your testing approach over time. Remember, the goal of AI in cross-platform testing isn’t to replace human judgment – it’s to enhance your team’s ability to deliver better software, faster.
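Two of these metrics are easy to compute from data most teams already have. The sketch below uses one common set of definitions, stated here as assumptions: a test is flaky if repeated runs on the same code revision produce both passes and failures, and defect leakage is the share of all defects that were first found in production. The function names and sample figures are illustrative.

```python
from typing import Dict, List


def flaky_rate(runs: Dict[str, List[bool]]) -> float:
    """Share of tests with mixed pass/fail results across reruns of the same revision."""
    if not runs:
        return 0.0
    flaky = sum(1 for results in runs.values() if len(set(results)) > 1)
    return flaky / len(runs)


def defect_leakage(found_in_testing: int, found_in_production: int) -> float:
    """Share of all known defects that escaped to production."""
    total = found_in_testing + found_in_production
    return found_in_production / total if total else 0.0


# Hypothetical rerun results (True = pass), for illustration only.
sample_runs = {
    "login": [True, True, True],
    "checkout": [True, False, True],   # mixed results: flaky
    "search": [False, False],          # consistently failing, not flaky
    "profile": [True, True],
}
print(flaky_rate(sample_runs))
print(defect_leakage(found_in_testing=45, found_in_production=5))
```

Tracking both numbers per platform, rather than only in aggregate, shows whether flakiness or escaped defects cluster on a particular device or browser.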

FAQs

How does AI combine automation with human oversight to improve cross-platform testing outcomes?

AI streamlines cross-platform testing by taking over repetitive tasks such as analyzing application performance, creating test scenarios, and spotting potential issues. This approach not only speeds up the process but also broadens the scope of testing across different platforms.

That said, human involvement remains critical. Experts are needed to validate the insights AI provides, fine-tune training data, and tackle complex or subtle challenges that machines might miss. By combining AI’s speed and precision with human judgment and expertise, testing becomes more reliable, ethical, and aligned with project objectives.

How does AI help ensure consistent UI/UX across different devices and platforms?

AI significantly contributes to maintaining a consistent UI/UX experience across various devices and platforms. By automating design updates and synchronizing key elements like design tokens, components, and style guides, it reduces the need for manual adjustments. This ensures a unified appearance and functionality across all platforms.

Moreover, AI uses pattern recognition and predictive analysis to dynamically adjust interfaces based on user behavior and context. This adaptability not only minimizes inconsistencies but also improves usability, delivering a smooth and cohesive experience no matter the device or platform in use.

How can AI-generated content variations improve A/B testing in cross-platform workflows?

AI-generated content variations make A/B testing across multiple platforms faster and more efficient. By quickly creating multiple content options tailored to specific audience segments, teams can test ideas at a much quicker pace. This helps pinpoint what resonates best with users in less time.

On top of that, AI offers real-time optimization and a deeper understanding of user behavior. This means teams can make more precise decisions, leading to better engagement and improved conversion rates. The result? A more personalized and impactful experience for users across different platforms.

Related Blog Posts

say hello to easy Content Testing

try PageTest.AI tool for free

Start making the most of your websites traffic and optimize your content and CTAs.

Related Posts

16-02-2026

16-02-2026

Ian Naylor

Ian Naylor

How Cognitive Load Impacts Conversion Rates

Reduce cognitive load with simpler layouts, clearer CTAs, and fewer choices to cut friction, improve UX, and lift conversion rates—backed by tests and metrics.

14-02-2026

14-02-2026

Ian Naylor

Ian Naylor

Ultimate Guide To SEO Conversion Metrics

Measure how organic traffic converts into leads and revenue. Learn key metrics, GA4 setup, Value Per Visit, CLV, and optimization tactics.

12-02-2026

12-02-2026

Ian Naylor

Ian Naylor

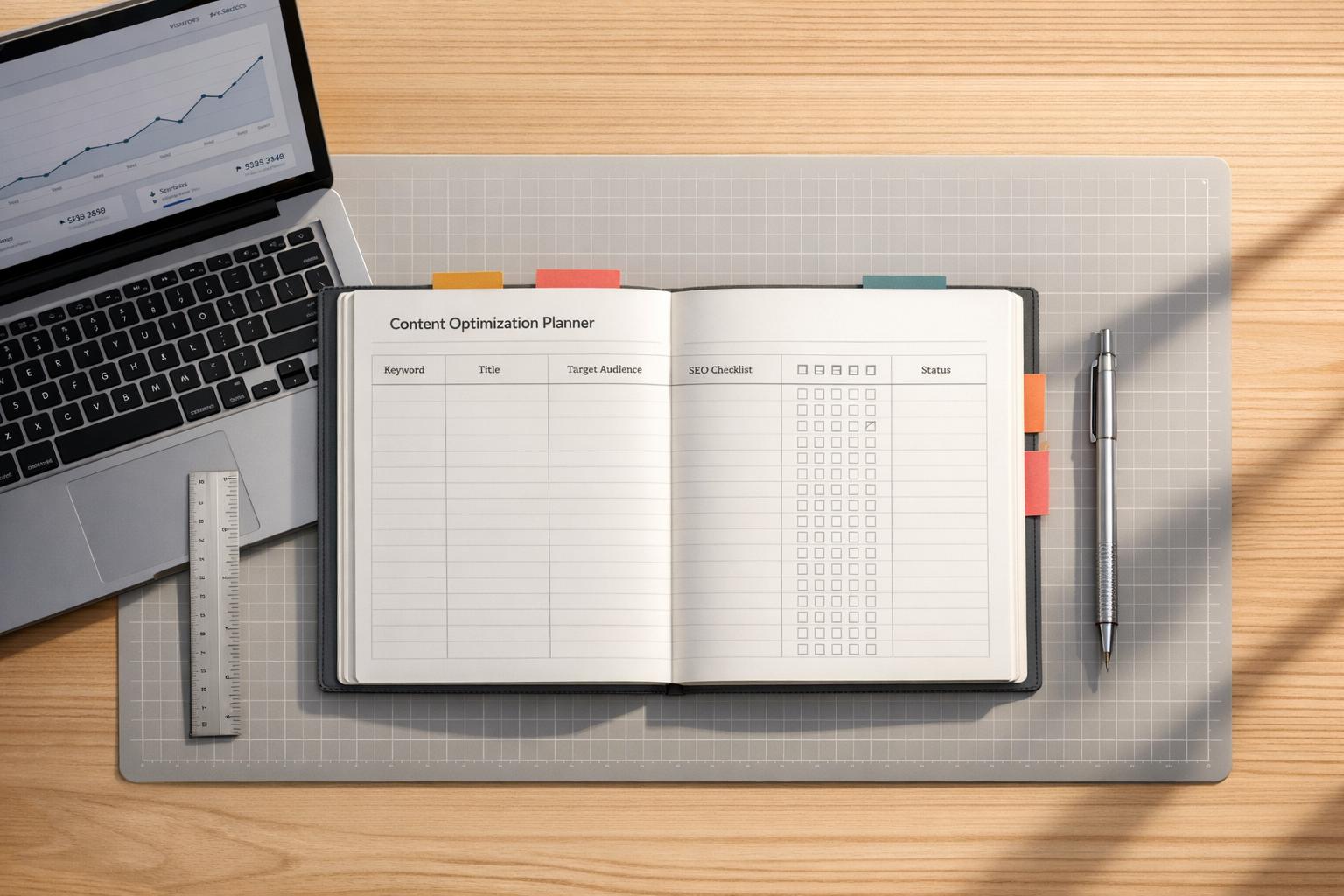

SEO Content Optimization Planner

Create search-friendly content with our SEO Content Optimization Planner. Get a custom plan to rank higher—try it free today!