How to Perform an A/B Test: A Step-by-Step Guide

21-01-2025 (Last modified: 21-05-2025)

Introduction

A/B testing is one of the most powerful tools in your optimization toolbox. It allows you to make data-driven decisions by comparing two versions of a webpage, email, or app feature to determine which performs better. In this article, we’ll walk you through how to perform an A/B test step by step, complete with examples and actionable insights. Let’s get started!

Step 1: Define Your Goal

The first step in an A/B test is to define clearly what you want to achieve. Without a specific, measurable goal, your test results may lack direction and meaning.

Examples of Goals:

- Increase conversions: Test changes to your call-to-action (CTA) to boost sign-ups.

- Improve click-through rates: Experiment with subject lines in your email campaigns.

- Reduce bounce rate: Adjust homepage elements to keep visitors engaged longer.

Step 2: Develop a Hypothesis

A solid hypothesis gives your test purpose. Your hypothesis should outline what you’re testing, why, and what you expect to happen.

Example Hypothesis:

- “Changing the CTA button color from blue to orange will increase conversions by 10% because orange is more attention-grabbing.”

When forming your hypothesis, focus on a single variable. Testing too many changes at once can make it difficult to determine what influenced the results.

Step 3: Identify Your Variables

To conduct a successful A/B test, you’ll need two main components:

- Control (Version A): The current version of your webpage or element.

- Variation (Version B): The modified version with a single change.

Examples of Variables:

- Headlines: “Limited Time Offer!” vs. “Exclusive Deal for You!”

- Images: A lifestyle photo of someone using your product vs. a product-only image.

- CTA Text: “Get Started Now” vs. “Sign Up Free.”

Step 4: Determine Your Sample Size

A common mistake in A/B testing is running tests with too few participants. To ensure accurate results, calculate the sample size you’ll need based on your:

- Baseline conversion rate.

- Minimum detectable effect (the smallest improvement you want to measure).

- Desired confidence level (typically 95%).
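As a rough illustration of how these inputs combine, the sketch below uses the standard normal-approximation formula for comparing two proportions. It rests on assumptions the article does not state: the minimum detectable effect is treated as a relative lift over the baseline, the test is two-sided, and statistical power defaults to 80%, a common convention. The function name `sample_size_per_variant` is hypothetical, and a dedicated calculator may use a slightly different formula.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, relative_mde, confidence=0.95, power=0.80):
    """Approximate visitors needed per variant (two-sided two-proportion z-test).

    baseline_rate: current conversion rate, e.g. 0.03 for 3%
    relative_mde:  smallest relative lift to detect, e.g. 0.5 for +50%
    confidence:    1 - alpha, e.g. 0.95
    power:         assumed default of 0.80 (not specified in the article)
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # expected rate for the variation
    alpha = 1 - confidence

    # Critical values of the standard normal distribution
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    p_bar = (p1 + p2) / 2  # average of the two rates
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Baseline of 3%, aiming to detect a lift to 4.5% (a 50% relative change)
    print(sample_size_per_variant(0.03, 0.5))
```

Smaller effects need far more traffic: halving the detectable lift roughly quadruples the required sample, which is why the minimum detectable effect is worth choosing carefully.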

Tools to Calculate Sample Size:

- PageTest.ai: Simplifies sample size calculations.

- Optimizely: Provides built-in sample size calculators.

Step 5: Split Your Audience

For an unbiased test, divide your audience randomly and evenly between the control and variation. Most A/B testing tools handle this automatically, ensuring visitors are evenly distributed.

Example:

- If you have 10,000 visitors, 5,000 see Version A, and 5,000 see Version B.
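If you are curious how a tool might do this behind the scenes, one common approach is to hash a stable visitor identifier into a bucket, so the same visitor always sees the same version. The sketch below is an illustration only, not how any particular product works; `assign_variant`, the experiment name, and the `user-…` identifiers are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "cta-color-test") -> str:
    """Deterministically assign a visitor to 'A' or 'B' with a roughly 50/50 split."""
    # Including the experiment name keeps assignments independent across tests
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % 100  # bucket in the range 0..99
    return "A" if bucket < 50 else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}")] += 1
    print(counts)  # the two counts should be close to 5,000 each
```

Because the assignment depends only on the identifier and the experiment name, a returning visitor is not switched between versions mid-test, which would otherwise blur the comparison.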

Step 6: Run the Test

Once your test is set up, it’s time to let it run. Ensure that you:

- Run the test for a full cycle: Cover at least one complete week so that weekday and weekend differences in user behavior are both represented.

- Monitor but don’t interfere: Resist the temptation to stop the test early, even if one version appears to be winning.

Recommended Test Duration:

- At least one to two weeks, depending on your traffic volume and the significance level you aim to achieve.
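To turn that guideline into a number for your own site, you can divide the total sample you need by your daily traffic and round up to whole weeks. The helper below is a minimal sketch under assumptions of its own: `estimated_test_days` is a hypothetical name, traffic is assumed to be steady from day to day, and every visitor is assumed to enter the test.

```python
import math


def estimated_test_days(sample_per_variant, daily_visitors, variants=2, min_days=7):
    """Estimate test length in days, rounded up to complete weeks."""
    total_needed = sample_per_variant * variants
    days = math.ceil(total_needed / daily_visitors)
    days = max(days, min_days)        # never shorter than one week
    return math.ceil(days / 7) * 7    # round up to a whole number of weeks


if __name__ == "__main__":
    # 5,000 visitors per version at 1,000 visitors per day
    print(estimated_test_days(5000, 1000))
```

In this example the raw figure is 10 days, which rounds up to 14 so that two full weekly cycles are covered.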

Step 7: Analyze Your Results

After your test concludes, analyze the data to determine which version performed better. Most A/B testing tools provide detailed reports with metrics like:

- Conversion rates

- Click-through rates

- Statistical significance

Example:

- Version A: 3% conversion rate (150 conversions out of 5,000 visitors).

- Version B: 4.5% conversion rate (225 conversions out of 5,000 visitors).

- Statistical significance: p-value < 0.05, meaning a difference this large would be unlikely if the two versions truly performed the same, so Version B can be declared the winner.
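To see where a p-value like this can come from, here is a minimal sketch of a two-sided two-proportion z-test applied to the example numbers. This is one standard method, chosen here as an assumption; your testing tool may use a different approach (for example, a Bayesian or sequential method). The function name `two_proportion_z_test` is hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, p_value) for a two-sided test of equal conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    z, p = two_proportion_z_test(150, 5000, 225, 5000)
    print(f"z = {z:.2f}, p-value = {p:.5f}")
    print("Significant at 95%" if p < 0.05 else "Not significant at 95%")
```

For the figures in the example, the z-score comes out just under 4 and the p-value falls well below 0.05, which is consistent with calling Version B the winner.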

Step 8: Implement the Winning Variation

Once you’ve identified the better-performing version, it’s time to make it the default. Use your insights to inform future tests and further refine your strategies.

Step 9: Iterate and Test Again

Optimization is an ongoing process. Use the results of your test to form new hypotheses and keep testing.

Example:

- After improving your CTA, test the headline to see if further improvements can be made.

Pro Tips for Successful A/B Testing

- Test One Variable at a Time: Focus on a single change to avoid confusion.

- Prioritize High-Impact Areas: Start with elements like CTAs, headlines, and landing pages.

- Segment Your Audience: Analyze results by demographics or device types for deeper insights.

- Document Your Tests: Keep a record of what you tested, your results, and any lessons learned.

- Use Reliable Tools: Tools like PageTest.ai and Optimizely simplify the process and provide accurate data.

Common Mistakes to Avoid

- Stopping Tests Early: Premature conclusions can lead to incorrect decisions.

- Testing Too Many Changes: Focus on one variable per test.

- Ignoring Statistical Significance: Ensure your results are meaningful and not due to chance.

Tools for A/B Testing

- PageTest.ai: Perfect for beginners and advanced users. AI-powered and free up to 100,000 tests per month.

- Google Optimize alternatives: Google Optimize was discontinued in September 2023, so consider tools like VWO or Optimizely for more advanced testing.

- Hotjar: Use heatmaps to understand user behavior before testing.

Conclusion

Now that you know how to perform an A/B test, you have the tools and knowledge to start optimizing your website, email campaigns, and more. Remember, A/B testing is a cycle of experimentation and learning. By following this guide and testing strategically, you’ll be well on your way to driving better results and making data-backed decisions. Happy testing!

Q&A: Performing an A/B Test the Right Way

- What is the first step to perform an A/B test?

Start by defining a clear, measurable goal, such as increasing conversions, improving click-through rates, or reducing bounce rate. Without a goal, your test won’t have direction.

- How do I write a good A/B testing hypothesis?

A solid hypothesis should identify what you’re changing, why, and what result you expect. For example: “Changing the CTA color to orange will increase clicks because it draws more attention.”

- What’s the difference between control and variation in A/B testing?

The control (Version A) is your current version, while the variation (Version B) includes one change you’re testing. This helps isolate the effect of that specific change.

- How can I determine the right sample size for my A/B test?

Use tools like PageTest.ai or Optimizely’s calculators. Your sample size depends on your current conversion rate, the minimum change you want to detect, and your confidence level (usually 95%).

- Why is audience split important in A/B testing?

Randomly splitting your audience ensures that each version gets equal exposure and that your results aren’t biased by who sees which version.

- How long should I let my A/B test run?

Most tests should run for at least one to two weeks to account for daily and weekly fluctuations. Ending too soon may lead to inaccurate results.

- What metrics should I analyze after running an A/B test?

Focus on key metrics like conversion rates, click-through rates, and statistical significance (often via p-values). These show which version truly performed better.

- What happens after I identify the winning variation?

You implement the winning version as the new default and use the insights to inform future tests. Optimization should be a continuous process.

- Can I test multiple changes at once?

Not in a standard A/B test. Stick to one variable per test to pinpoint what caused the change. If you want to test multiple elements, consider multivariate testing instead.

- What are the best tools to run an A/B test?

PageTest.ai is a great starting point: AI-powered, simple to use, and free up to 100,000 tests/month. Other solid options include Optimizely, VWO, and Hotjar for pre-test user behavior insights.

say hello to easy Content Testing

try PageTest.AI tool for free

Start making the most of your websites traffic and optimize your content and CTAs.

Related Posts

03-02-2026

03-02-2026

Ian Naylor

Ian Naylor

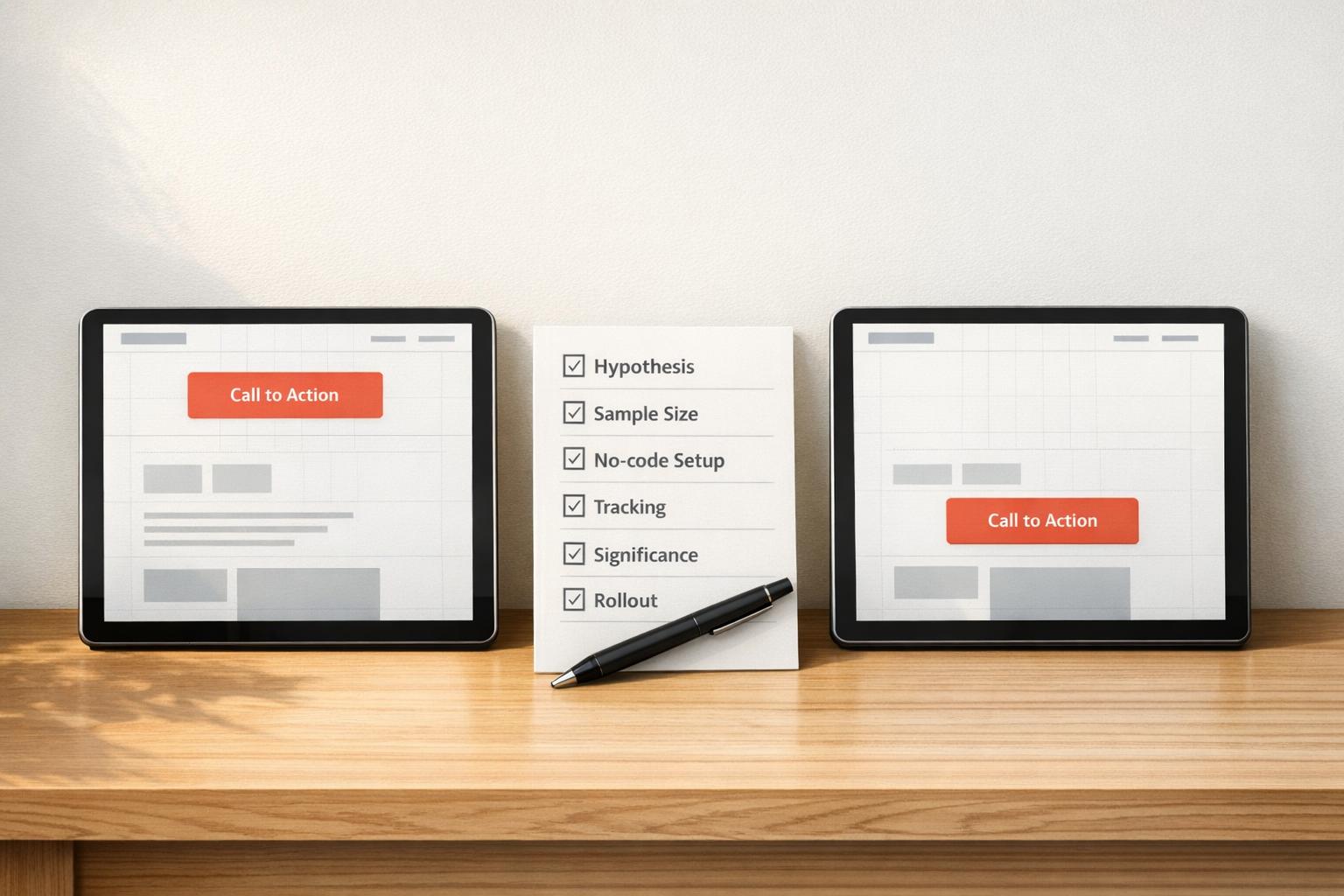

Checklist for A/B Testing CTA Placement

A step-by-step checklist for A/B testing CTA placement: hypothesis, sample size, no-code setup, tracking, significance, and rollout to boost conversions.

02-02-2026

02-02-2026

Ian Naylor

Ian Naylor

Best Practices for AI Competitor Analysis in 2025

Set goals, track AI-search competitors, run keyword and content gap analysis, use AI-driven SWOT, and monitor real-time market changes to gain an edge in 2025.

31-01-2026

31-01-2026

Ian Naylor

Ian Naylor

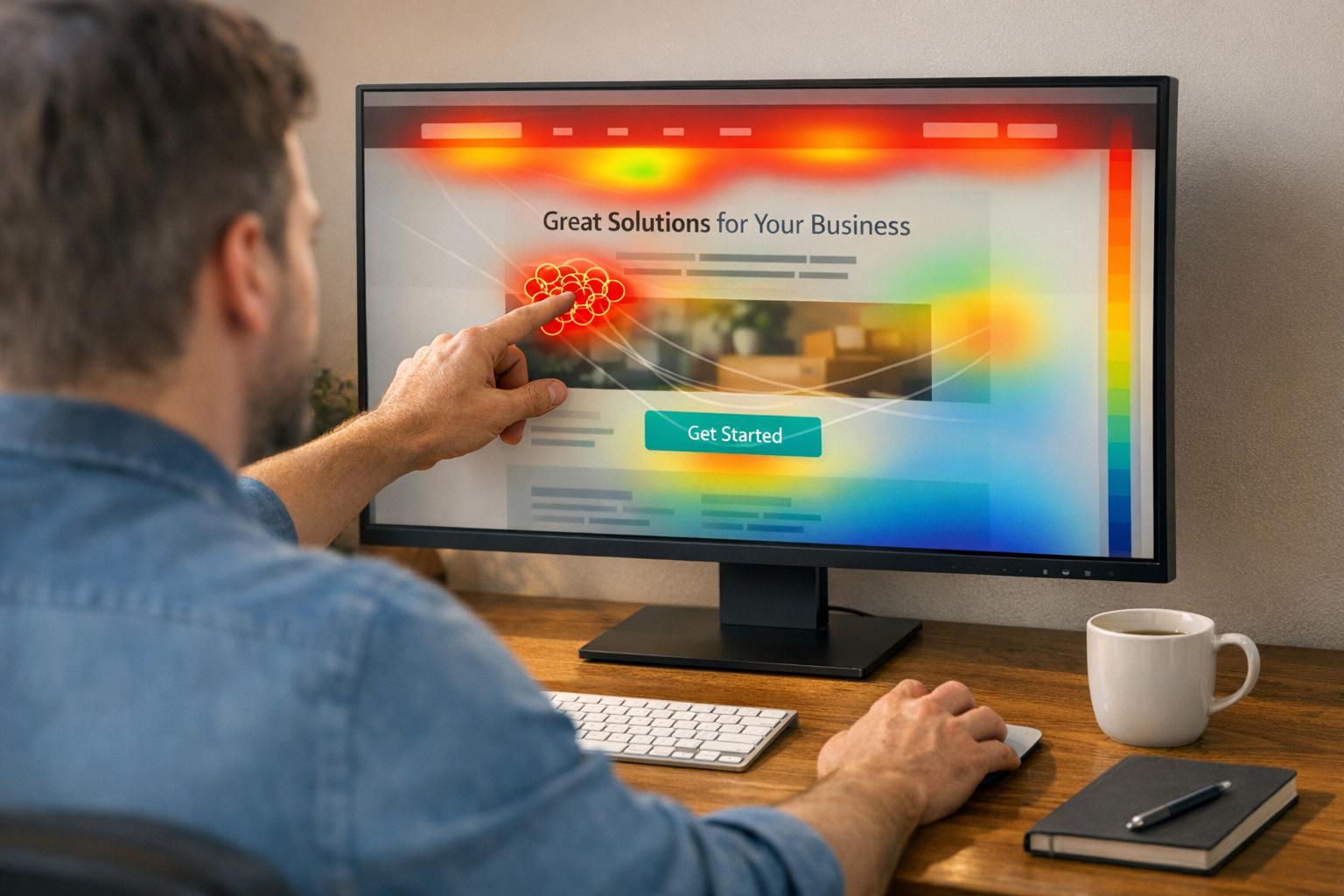

How To Read Heatmap Data For CRO

Interpret click, scroll, and mouse-movement heatmaps to spot hotspots, fix rage clicks, improve CTA placement, and lift conversions with data-driven changes.