AI in Real-Time Performance Monitoring

22-01-2026 (Last modified: 22-01-2026)

Real-time performance monitoring is no longer a luxury – it’s a necessity for businesses navigating complex systems. AI has emerged as a key player, offering faster insights, predictive analytics, and automated solutions that traditional methods can’t match. Here’s what you need to know:

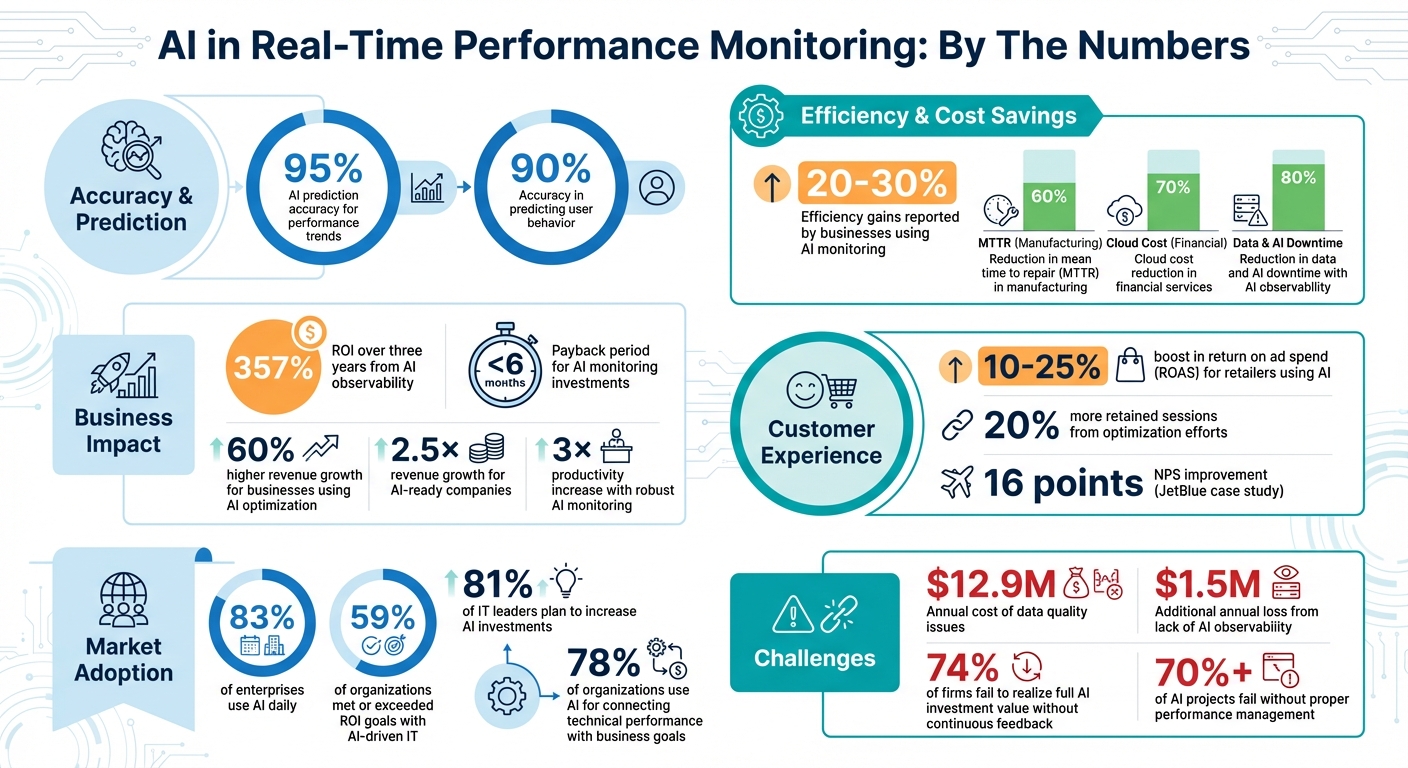

- Some AI models have predicted performance trends with up to 95% accuracy, helping reduce downtime and improve reliability.

- It uses adaptive thresholds to minimize false alarms and detect subtle anomalies.

- Predictive models forecast resource bottlenecks and user behavior, enabling smarter resource allocation.

- Organizations using AI for resource optimization report 20–30% efficiency gains, and most say they have met or exceeded their ROI goals.
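Forecasting a resource bottleneck can be as simple as extrapolating a metric's recent trend. The sketch below uses Holt's linear exponential smoothing, a classical method chosen here for illustration only; the tools discussed in this article may rely on LSTMs or other models. The function names and the smoothing parameters `alpha` and `beta` are assumptions, not values taken from any product.

```python
from typing import Optional, Sequence


def holt_forecast(series: Sequence[float], alpha: float = 0.5, beta: float = 0.3,
                  horizon: int = 10) -> list:
    """Holt's linear exponential smoothing: track a level and a trend, then extrapolate."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for value in series[1:]:
        prev_level = level
        # Blend the new observation with the previous one-step-ahead estimate.
        level = alpha * value + (1 - alpha) * (level + trend)
        # Blend the latest change in level with the previous trend estimate.
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return [level + step * trend for step in range(1, horizon + 1)]


def steps_until_capacity(series: Sequence[float], capacity: float,
                         horizon: int = 100, **params) -> Optional[int]:
    """Return how many future intervals until the forecast reaches `capacity`, or None."""
    forecast = holt_forecast(series, horizon=horizon, **params)
    for step, value in enumerate(forecast, start=1):
        if value >= capacity:
            return step
    return None
```

For example, feeding hourly disk-usage percentages into `steps_until_capacity(series, capacity=90)` returns roughly how many hours remain before the disk is 90% full, or `None` if the trend stays below that level within the horizon.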

AI-driven tools integrate seamlessly into workflows, combining real-time data with automation to deliver actionable insights. From preventing outages to boosting website conversions, AI is reshaping how businesses handle performance.

If you’re looking to stay competitive, investing in AI-powered monitoring systems is a smart move. They not only solve issues faster but also prevent them from happening in the first place.

AI Performance Monitoring: Key Statistics and Benefits

Key Components of AI-Driven Monitoring Systems

AI has reshaped real-time monitoring by turning raw data into meaningful insights. Let’s break down the essential components that make these systems work.

Automated Data Collection and Processing

At the heart of AI-driven monitoring is data acquisition. Systems pull information from multiple sources, including telemetry logs, CPU and memory usage, network traffic, and IoT sensors. For example, industrial equipment might provide temperature or pressure readings. Tools like MSTICPY and Azure Monitor Query streamline this process by ingesting data via APIs, ensuring a continuous flow of information into the monitoring pipeline.

However, raw data is often messy. Automated preprocessing cleans and organizes it, extracting key attributes like averages or variances to highlight performance trends. Advanced techniques, such as time-series LSTM modeling, capture long-term patterns to detect subtle changes in system behavior. With the power of multi-core servers and GPUs, these systems can process and analyze data in real time.
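A minimal sketch of the preprocessing step, assuming metrics arrive as a list of numeric samples in which missing readings show up as `None` or `NaN`. It drops the gaps and summarizes fixed-size windows into features such as mean and variance; the function names and window size are hypothetical.

```python
import math
import statistics
from typing import Iterable, List, Optional


def clean(samples: Iterable[Optional[float]]) -> List[float]:
    """Drop missing readings (None or NaN) from a raw metric stream."""
    return [s for s in samples if s is not None and not math.isnan(s)]


def window_features(samples: Iterable[Optional[float]], size: int) -> List[dict]:
    """Split cleaned samples into non-overlapping windows and summarize each one.

    A trailing window shorter than `size` is discarded.
    """
    values = clean(samples)
    features = []
    for start in range(0, len(values) - size + 1, size):
        window = values[start:start + size]
        features.append({
            "mean": statistics.fmean(window),
            "variance": statistics.pvariance(window),  # population variance
            "max": max(window),
        })
    return features
```

Non-overlapping windows keep the example short; production pipelines often use sliding windows so that each new sample updates the features.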

This clean and structured data becomes the foundation for predictive insights.

Predictive Analytics and Anomaly Detection

Predictive analytics goes a step further by forecasting trends and spotting irregularities before they cause problems. AI models, including LSTM networks, have predicted performance with up to 95% accuracy in some cases. For anomaly detection, unsupervised algorithms such as K-means and DBSCAN clustering identify unusual patterns without needing pre-labeled data, and they help build adaptive baselines that adjust over time. In industrial IoT monitoring, these approaches have proven highly accurate for sensor parameters such as temperature.
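To make the clustering idea concrete, here is a minimal pure-Python DBSCAN, one of the two algorithms named above. Points that belong to no dense cluster are labeled noise, and those are the anomalies. The two features (CPU % and latency in ms), `eps`, and `min_pts` are illustrative assumptions.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]
NOISE = -1


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """Minimal DBSCAN: return a cluster id for each point, or NOISE (-1) for outliers."""
    labels: List[Optional[int]] = [None] * len(points)

    def region(i: int) -> List[int]:
        # Brute-force neighbor query (O(n^2) overall); fine for a sketch.
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region(i)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may be relabeled later as a border point
            continue
        labels[i] = cluster  # i is a core point: start a new cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbors = region(j)
            if len(j_neighbors) >= min_pts:  # j is also a core point: keep expanding
                queue.extend(j_neighbors)
        cluster += 1
    return labels
```

In practice, scale features with different units before clustering, or one of them will dominate the distance; libraries such as scikit-learn provide an optimized `DBSCAN` class.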

A standout example of AI’s potential is Microsoft’s “Argos” system, introduced in 2024. By using large language models (LLMs), it improved anomaly detection F1 scores by 28.3% and made inference up to 34.3 times faster.

Once predictive analytics and anomaly detection are in place, the next step is integrating these insights into everyday workflows.

Integration with Existing Tools and Workflows

For AI-driven monitoring to deliver its full value, it must fit seamlessly into existing systems. This starts with observability-by-design – embedding monitoring capabilities during the development phase. Open standards like OpenTelemetry ensure that telemetry data (logs, metrics, traces) can move across platforms without friction.

One practical example is configuring GitHub Actions to run test queries after each build. This catches performance deviations early and can stop a deployment before issues escalate.
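The gate itself can be a small script that a GitHub Actions step runs after the build, failing the job when a test query slows down. This is a hypothetical sketch: the script name, the JSON files of per-query latencies (`baseline.json`, `current.json`), and the 10% tolerance are all assumptions.

```python
import json
import sys
from typing import Dict, List


def find_regressions(baseline: Dict[str, float], current: Dict[str, float],
                     tolerance: float = 0.10) -> List[str]:
    """List queries whose latency exceeds the baseline by more than `tolerance`."""
    problems = []
    for query, base_ms in baseline.items():
        now_ms = current.get(query)
        if now_ms is None:
            problems.append(f"{query}: missing from current run")
        elif now_ms > base_ms * (1 + tolerance):
            problems.append(f"{query}: {base_ms:.0f} ms -> {now_ms:.0f} ms")
    return problems


def main(baseline_path: str, current_path: str) -> int:
    with open(baseline_path) as f:
        baseline = json.load(f)
    with open(current_path) as f:
        current = json.load(f)
    problems = find_regressions(baseline, current)
    for problem in problems:
        print(f"PERF REGRESSION {problem}")
    # A non-zero exit status fails the CI step, which blocks the deployment.
    return 1 if problems else 0


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

A workflow step could invoke it as `python perf_gate.py baseline.json current.json`; because the script exits with status 1 on a regression, the job fails and the later deployment steps do not run.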

In web performance monitoring, tools like PageTest.AI link real-time performance data with A/B testing, connecting technical metrics such as load times directly to conversion rates. This aligns technical performance with business goals, and it reflects a broader trend: 78% of organizations now use AI in at least one area of their operations.

“This is exactly what we need [in our decision-making], connecting application performance and business outcomes.”

– Director of e-commerce, Budget Airline
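Linking load times to conversions starts with a simple question: how does the conversion rate change as pages get slower? This hypothetical sketch buckets sessions by load time; it is not how PageTest.AI or Dynatrace compute their metrics, and the `(load_time_ms, converted)` session format is an assumption.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def conversion_by_load_time(sessions: Iterable[Tuple[float, bool]],
                            bucket_ms: int = 1000) -> Dict[int, float]:
    """Group sessions into load-time buckets and return each bucket's conversion rate."""
    totals: Dict[int, int] = defaultdict(int)
    conversions: Dict[int, int] = defaultdict(int)
    for load_ms, converted in sessions:
        bucket = int(load_ms // bucket_ms) * bucket_ms  # 0, 1000, 2000, ...
        totals[bucket] += 1
        conversions[bucket] += int(converted)
    return {bucket: conversions[bucket] / totals[bucket] for bucket in sorted(totals)}
```

A falling rate across buckets suggests which load-time threshold is worth optimizing toward first.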

Unified dashboards further enhance usability by combining AI-specific insights with traditional metrics. Alerts routed through familiar platforms like Slack or PagerDuty ensure quick action, making AI monitoring feel like a natural extension of existing workflows.
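Routing an alert into Slack can be done with an incoming webhook, which accepts a JSON body with a `text` field. The sketch below assumes you already have a webhook URL issued by Slack for your channel; the function names, service name, and metric name are placeholders. PagerDuty uses its own Events API with a different payload, which is not shown.

```python
import json
import urllib.request


def build_alert(service: str, metric: str, value: float, threshold: float) -> dict:
    """Build a minimal Slack incoming-webhook payload (only the `text` field)."""
    return {"text": f"[ALERT] {service}: {metric} = {value:g} (threshold {threshold:g})"}


def send_alert(webhook_url: str, payload: dict) -> int:
    """POST the payload as JSON to the webhook and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return response.status
```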

These components work together to help AI-driven systems improve performance metrics across a wide range of applications.

Use Cases and Benefits of AI in Real-Time Monitoring

AI-driven monitoring is delivering tangible results across industries, improving performance, cutting costs, and raising customer satisfaction, from website conversions to day-to-day operations and personalized customer experiences.

Improving Website Conversion Rates

AI tools are reshaping how businesses approach website performance by directly linking it to revenue. For instance, in April 2024, Dynatrace partnered with a leading budget airline to launch its Opportunity Insights app. This AI-powered tool analyzed millions of user sessions, focusing on performance metrics like Visually Complete and Speed Index, which are critical to reducing exit rates. With 90% accuracy in predicting user behavior, it let the airline prioritize the fixes with the greatest financial impact.

Platforms such as PageTest.AI take this a step further by combining AI-driven A/B testing with real-time monitoring. They track how small performance delays influence user engagement and conversions. The AI continuously tests variables like headlines, calls-to-action, and button text, directing traffic toward the best-performing options. Businesses leveraging AI for optimization report 60% higher revenue growth compared to their peers and respond to shifting consumer trends twice as quickly.
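The article doesn't say which algorithm PageTest.AI uses to steer traffic, so this sketch swaps in epsilon-greedy, one of the simplest multi-armed bandit strategies: most visitors see the variant with the best observed conversion rate, while a small share (`epsilon`) keeps exploring the others. The class name and parameters are hypothetical.

```python
import random


class EpsilonGreedy:
    """Epsilon-greedy multi-armed bandit over page variants (e.g. headlines or CTAs)."""

    def __init__(self, variants, epsilon=0.1, rng=None):
        self.epsilon = epsilon
        self.rng = rng or random.Random()
        self.shows = {v: 0 for v in variants}
        self.wins = {v: 0 for v in variants}

    def rate(self, variant):
        """Observed conversion rate; 0.0 for a variant that has not been shown yet."""
        shows = self.shows[variant]
        return self.wins[variant] / shows if shows else 0.0

    def choose(self):
        # Explore a random variant with probability epsilon, otherwise exploit the best.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shows))
        return max(self.shows, key=self.rate)

    def record(self, variant, converted):
        self.shows[variant] += 1
        self.wins[variant] += int(converted)
```

Thompson sampling is a common alternative that wastes less traffic on exploration once one variant is clearly ahead.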

Optimizing Business Operations

AI-powered monitoring has revolutionized operational efficiency across various sectors. In 2023, Quinnox’s AI-based event intelligence reduced manufacturing mean time to repair (MTTR) by 60%, while Avesha’s Smart Scaler slashed cloud costs for financial services by up to 70%. Data centers have also benefited, reporting efficiency gains of 20–30% through AI-driven resource optimization.

The adoption of AI in IT operations is yielding significant returns. Currently, 59% of organizations using AI-driven IT solutions have met or exceeded their ROI goals, and 81% of IT leaders plan to increase their AI investments this year. The same insights that streamline operations also shape how businesses interact with their customers.

Improving Customer Experience

AI is transforming customer experience by enabling personalization at scale. Retailers using AI for targeted campaigns report a 10% to 25% boost in return on ad spend (ROAS). By analyzing real-time user behavior, AI delivers tailored content and product recommendations, moving beyond generic segmentation.

“Personalization drives real-time customer engagement.”

– Aaron Cheris, Partner in Bain & Company’s Retail practice

AI-powered chatbots and virtual assistants further raise customer satisfaction by offering instant, context-aware support. Behind the scenes, real-time monitoring can detect and address subtle issues, such as minor JavaScript errors or latency spikes, before they affect the user experience. Businesses that integrate AI with robust data see 2.5× revenue growth and 3× higher productivity. To unlock that potential, however, AI must be treated as an evolving asset with continuous feedback loops; 74% of firms still fail to realize the full value of their AI investments.

Challenges and Best Practices for Implementing AI in Monitoring

AI has transformed real-time monitoring, but putting it into action comes with its own set of hurdles. Organizations often encounter obstacles like data security, prediction accuracy, and system integration that can derail projects before they yield results. Tackling these issues is essential to getting the most out of AI-driven monitoring systems.

Addressing Data Privacy and Security Concerns

AI monitoring systems handle massive amounts of data, much of which may include sensitive information, and data quality problems alone cost organizations an average of $12.9 million a year. Without proper protections, these systems can also expose personally identifiable information (PII), risking violations of regulations like GDPR, HIPAA, or CCPA.

To safeguard data, start by securing telemetry in transit with HTTPS/TLS, enforcing role-based access control (RBAC), and anonymizing PII before it’s processed. Then tie monitoring to compliance: add automated checks for biased outputs and keep an audit trail for every AI decision. These measures protect sensitive information and create the accountability that AI monitoring depends on, which matters when 83% of enterprises use AI daily, yet only 13% have strong visibility into how it’s used.
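As a minimal sketch of scrubbing PII before telemetry is processed, the function below masks email addresses and IPv4 addresses in free-text messages and replaces user IDs with a salted hash. The event field names (`user_id`, `message`) and the regular expressions are assumptions; real deployments usually need broader detection rules.

```python
import hashlib
import re

# Illustrative patterns only; they will miss some formats and may over-match others.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def pseudonymize(value: str, salt: str) -> str:
    """Replace an identifier with a truncated salted SHA-256 digest (stable per salt)."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()[:16]


def scrub(event: dict, salt: str) -> dict:
    """Return a copy of a telemetry event with PII masked or pseudonymized."""
    clean = dict(event)  # never mutate the caller's event
    if "user_id" in clean:
        clean["user_id"] = pseudonymize(str(clean["user_id"]), salt)
    if "message" in clean:
        text = EMAIL.sub("[EMAIL]", clean["message"])
        clean["message"] = IPV4.sub("[IP]", text)
    return clean
```

Salted hashing is pseudonymization rather than full anonymization: under GDPR, pseudonymized data still counts as personal data, so keep the salt secret and access-controlled.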

Ensuring Accuracy of AI Predictions

AI systems are only as good as the data they’re fed – a concept often summarized as “garbage in, garbage out.” Organizations lacking proper AI observability lose an extra $1.5 million annually due to data downtime. Combat issues like data drift by setting up continuous feedback loops for retraining. For example, Nasdaq’s automated observability reduced data quality issues by 90% and saved $2.7 million. Their success came from using dynamic thresholds to adapt to natural data changes, minimizing false alarms.
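Nasdaq’s implementation isn’t described, but the general idea of a dynamic threshold can be sketched with exponentially weighted moving averages (EWMA) of a metric’s mean and variance. A value is flagged when it falls more than `k` standard deviations from the current baseline. The class name and the `alpha`, `k`, and `warmup` defaults are assumptions.

```python
import math


class AdaptiveThreshold:
    """Flag values outside mean +/- k*std, where mean and variance are EWMAs.

    Because the baseline keeps updating, slow and natural shifts in the data
    move the threshold with them instead of producing a stream of false alarms.
    """

    def __init__(self, alpha: float = 0.1, k: float = 3.0, warmup: int = 10):
        self.alpha, self.k, self.warmup = alpha, k, warmup
        self.mean = 0.0
        self.var = 0.0
        self.count = 0

    def update(self, x: float) -> bool:
        """Return True if x is anomalous, then fold x into the baseline."""
        self.count += 1
        if self.count == 1:
            self.mean = x
            return False
        std = math.sqrt(self.var)
        # No alerts until the baseline has seen `warmup` values.
        anomalous = self.count > self.warmup and abs(x - self.mean) > self.k * std
        # Incremental EWMA updates for mean and variance.
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return anomalous
```

This version folds every value, including anomalies, back into the baseline, so a lasting level shift is eventually accepted as the new normal; skipping updates for flagged points is a reasonable alternative when short spikes would otherwise inflate the variance.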

“AI systems are probabilistic, as identical inputs can yield different outputs.” – Tredence Editorial Team

Encourage end-users to flag incorrect predictions and feed this data back into retraining cycles. This improves model fairness and accuracy over time. Additionally, integrate “LLM-as-a-judge” methods within your CI/CD pipeline to test prompts and model updates against high-quality datasets before deployment. This proactive approach catches errors early, ensuring your AI delivers reliable predictions that enhance user experiences.
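A feedback loop needs a trigger for retraining. This hypothetical sketch keeps a rolling window of recent predictions, records which ones users flagged as wrong, and signals retraining when the flagged share crosses a threshold. The window size, the 5% default threshold, and the class name are assumptions; the LLM-as-a-judge step is not shown.

```python
from collections import deque
from typing import List


class FeedbackMonitor:
    """Track user flags on recent predictions and signal when to retrain."""

    def __init__(self, window: int = 500, max_error_rate: float = 0.05):
        self.recent = deque(maxlen=window)  # oldest entries drop off automatically
        self.max_error_rate = max_error_rate

    def record(self, prediction_id: str, flagged_wrong: bool) -> None:
        self.recent.append((prediction_id, flagged_wrong))

    def error_rate(self) -> float:
        if not self.recent:
            return 0.0
        return sum(flag for _, flag in self.recent) / len(self.recent)

    def needs_retraining(self) -> bool:
        return self.error_rate() > self.max_error_rate

    def flagged_ids(self) -> List[str]:
        """IDs of flagged predictions, to be relabeled and added to training data."""
        return [pid for pid, flag in self.recent if flag]
```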

Best Practices for System Integration

Legacy systems and unpredictable AI workloads can complicate integration efforts, often leading to alert fatigue. A phased approach works best: start with low-risk applications like anomaly detection before advancing to more complex tasks like predictive monitoring. Use vendor-neutral frameworks like OpenTelemetry to ensure data portability and avoid being locked into proprietary solutions.

JetBlue offers a great example of success. By incorporating AI observability into their incident response tools, they improved their Net Promoter Score (NPS) by 16 points. Their strategy included creating detailed runbooks for managing common AI-related incidents.

For website optimization, tools like PageTest.AI embed monitoring directly into testing workflows, automatically adjusting compute power during latency spikes. This ensures seamless testing of elements like headlines and CTAs without interruptions.
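How PageTest.AI adjusts compute isn’t described here. A common pattern is the proportional rule used by the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × observed metric / target metric), with a tolerance band to avoid flapping. This sketch applies that rule to p95 latency, which is an assumption; latency does not always fall linearly as replicas are added. The function name and bounds are hypothetical.

```python
import math


def desired_replicas(current: int, observed_p95_ms: float, target_p95_ms: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA, applied to latency."""
    ratio = observed_p95_ms / target_p95_ms
    if abs(ratio - 1.0) <= tolerance:
        return current  # within the tolerance band: leave capacity alone
    wanted = math.ceil(current * ratio)
    # Clamp to configured bounds so a noisy reading can't scale without limit.
    return max(min_replicas, min(max_replicas, wanted))
```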

The effort pays off. AI observability can deliver a 357% ROI over three years, with a payback period of under six months. It can also lead to an 80% reduction in data and AI downtime. These results highlight how treating AI monitoring as a strategic investment can drive both operational efficiency and revenue growth.
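The article doesn’t say how the 357% figure was calculated. Under the standard definition, with $B$ the total benefits and $C$ the total costs over the three-year period, it works out as follows:

```latex
\text{ROI} = \frac{B - C}{C} \times 100\%, \qquad
\text{ROI} = 357\% \;\Rightarrow\; B - C = 3.57\,C \;\Rightarrow\; B = 4.57\,C
```

In other words, each dollar spent would return about 4.57 dollars in benefits over three years. The payback period is the point at which cumulative benefits first equal cumulative costs.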

Conclusion: The Future of AI in Performance Monitoring

Key Takeaways

AI is revolutionizing real-time performance monitoring by shifting the focus from solving problems after they occur to preventing them before they happen. This predictive prevention approach allows for highly accurate forecasts of potential failures, ensuring they’re addressed before users are affected. Companies that have adopted AI-driven resource allocation have reported efficiency gains of 20% to 30%.

Predictive models can now forecast user behavior with up to 90% accuracy, as in the airline case above. These advances have also improved user retention, with optimization efforts delivering 20% more retained sessions than initially expected. Experts highlight the critical link between application performance and overall business success, which makes AI an important tool in this space.

Tools like PageTest.AI are bridging the gap by integrating AI-powered monitoring with testing workflows, helping businesses directly connect design elements to user behavior and conversion rates.

Future Trends in AI Monitoring

Looking ahead, AI monitoring is poised to bring even more advancements. One of the most promising developments is autonomous remediation, where systems not only identify issues but also resolve them automatically by executing predefined remediation playbooks and verifying the results – all without human involvement. Another emerging focus is AI Performance Management (AIPM), which continuously evaluates and fine-tunes AI systems to ensure optimal performance.
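Autonomous remediation can be pictured as a loop over predefined playbooks: match the incident, run the fix, then verify the result. This is a hypothetical sketch; the `Playbook` structure, the incident fields, and the escalation messages are assumptions, not any vendor’s API.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Playbook:
    """A predefined remediation: if `matches` is true, run `action`, then `verify`."""
    name: str
    matches: Callable[[dict], bool]
    action: Callable[[dict], None]
    verify: Callable[[dict], bool]


def remediate(incident: dict, playbooks: List[Playbook]) -> str:
    """Run the first matching playbook and report whether the fix was verified."""
    for playbook in playbooks:
        if playbook.matches(incident):
            playbook.action(incident)
            if playbook.verify(incident):
                return f"resolved by {playbook.name}"
            return f"{playbook.name} failed verification: escalate to on-call"
    return "no playbook matched: escalate to on-call"
```

The fallback to on-call staff when verification fails is a deliberate design choice: even hands-off remediation needs a safe exit when a playbook doesn’t work.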

The stakes couldn’t be higher. Research shows that over 70% of AI projects fail to deliver lasting value without proper performance management. Gartner predicted that at least 30% of generative AI projects would be abandoned after the proof-of-concept stage by the end of 2025, due to challenges like poor data quality, budget overruns, or unclear objectives. However, companies that are “AI-ready” and implement robust monitoring practices see remarkable results, including 2.5× revenue growth and a 3× increase in productivity.

The key to success lies in adopting a strategy of continuous feedback, setting early performance benchmarks, and investing in advanced AI monitoring systems. Businesses that embrace these practices will be better positioned to thrive in an increasingly AI-driven world.

FAQs

How does AI enhance accuracy in real-time performance monitoring?

AI improves real-time performance monitoring by using predictive analytics, anomaly detection, and automatic adjustments. These tools enable systems to recognize patterns, spot irregularities, and react much faster than traditional manual methods, resulting in greater accuracy and quicker decisions.

With automated data analysis and the ability to learn from fresh information, AI allows organizations to track performance metrics instantly while reducing mistakes and delays. This approach delivers more dependable insights and streamlines operations for better overall efficiency.

What challenges arise when implementing AI-powered performance monitoring systems?

Implementing AI-powered performance monitoring systems isn’t without its hurdles. One major issue is ensuring data quality and accuracy. If the data feeding into AI models is incomplete or incorrect, the results can be unreliable, undermining the entire system’s effectiveness.

Another challenge is interpretability. Many AI models make decisions that are hard to explain, and in industries where understanding how a decision was made is critical, such as healthcare or finance, that lack of transparency can be a significant roadblock.

Privacy and security concerns add another layer of complexity. Sensitive data must be safeguarded, and organizations need to comply with regulations such as GDPR and CCPA, which govern how personal data is handled.

On top of that, integrating AI systems with existing infrastructure can be tricky. Achieving smooth compatibility without causing disruptions requires careful planning and execution. Finally, high computational costs for real-time analytics can strain budgets, especially for smaller organizations with limited resources. Overcoming these challenges is essential to fully unlock AI’s potential in performance monitoring.

How does AI improve real-time performance monitoring to enhance customer experience?

AI takes real-time performance monitoring to the next level by delivering practical insights and predictive analytics that allow businesses to tackle potential problems before they disrupt user experiences. By studying user behavior – like clicks, scrolling patterns, and moments when users drop off – AI pinpoints problem areas and fine-tunes essential website features such as headlines, calls-to-action, and checkout flows. The result? Improved user engagement and boosted conversion rates.

On top of that, AI-driven monitoring can spot irregularities, anticipate possible failures, and suggest timely actions to reduce downtime and keep things running smoothly. This shift from reacting to problems to preventing them helps businesses maintain high service standards and enhances customer satisfaction.
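Pinpointing where users drop off often starts with a funnel: count how many users reach each step and compute the share lost between consecutive steps. The function name, step names, and counts in this sketch are hypothetical.

```python
from typing import List, Tuple


def funnel_dropoff(steps: List[Tuple[str, int]]) -> List[Tuple[str, float]]:
    """For ordered (step_name, users_reaching_step) pairs, return the share of
    users lost between each step and the next."""
    transitions = []
    for (name, users), (next_name, next_users) in zip(steps, steps[1:]):
        lost = 1 - next_users / users if users else 0.0  # guard against empty steps
        transitions.append((f"{name} -> {next_name}", lost))
    return transitions
```

Sorting the result by drop-off share points to the step, such as a slow checkout page, where fixes are likely to pay off most.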

Related Blog Posts

say hello to easy Content Testing

try PageTest.AI tool for free

Start making the most of your websites traffic and optimize your content and CTAs.

Related Posts

20-01-2026

20-01-2026

Ian Naylor

Ian Naylor

How Search Intent Impacts Conversions

Aligning content to search intent is the most effective way to turn traffic into customers and reduce bounce rates.

19-01-2026

19-01-2026

Ian Naylor

Ian Naylor

How Responsive Design Boosts Mobile Conversions

Responsive design improves mobile UX, reduces bounce rates, boosts SEO and increases conversions via mobile-first layouts, faster pages, and touch-friendly CTAs.

17-01-2026

17-01-2026

Ian Naylor

Ian Naylor

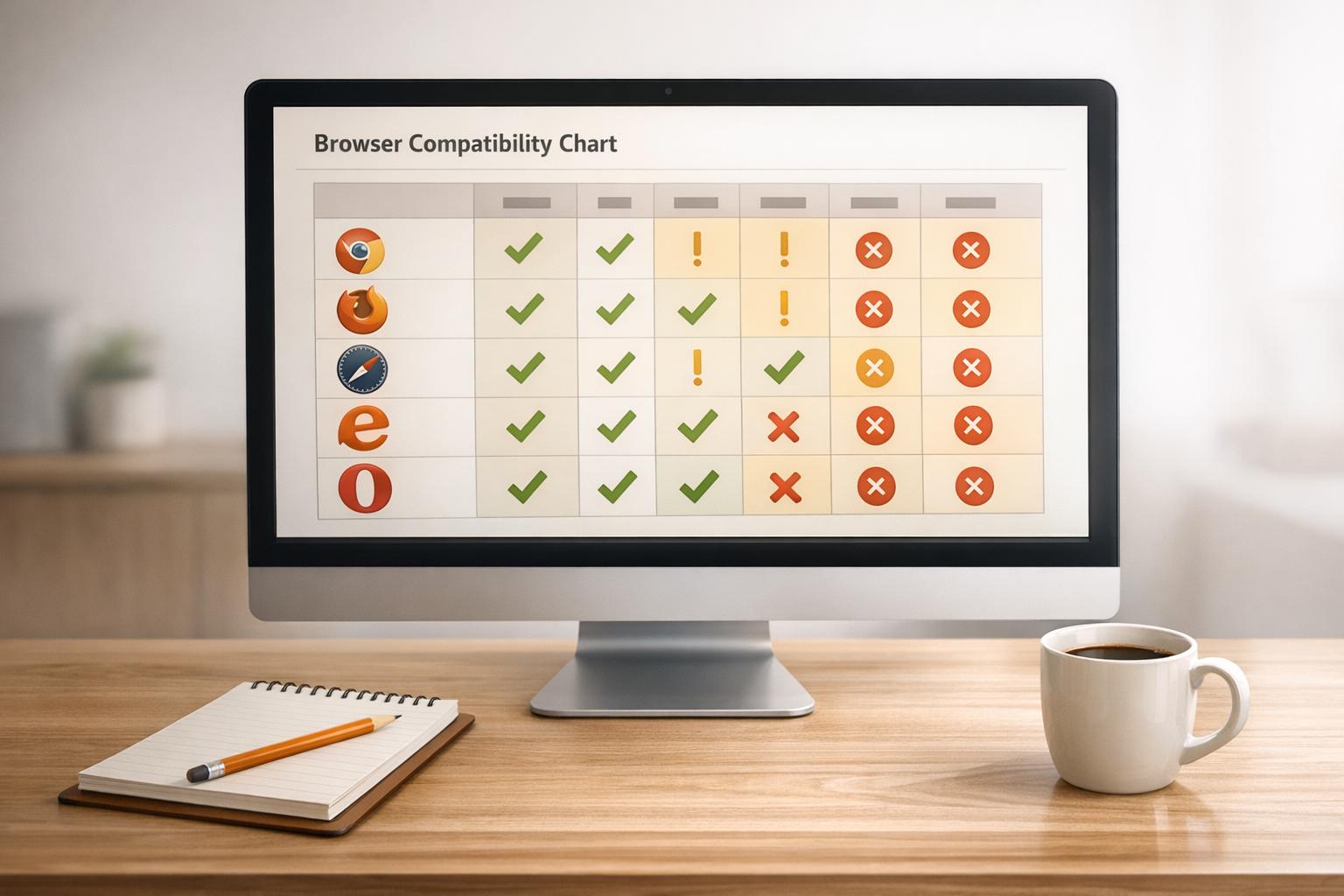

Checklist for Cross-Browser Compatibility Testing

Step-by-step checklist for cross-browser testing: pick target browsers and devices, build a test matrix, validate code, and verify visuals, functionality, performance, and accessibility.